I am trying to create BERT embeddings of text data, then use dimensionality reduction and cluster. I tried with some big datasets like amazon reviews and 20newsgroups, but whenever I created embeddings the classes were always overlapped and didn't form clear clusters, so using any clustering algorithm would have been useless.

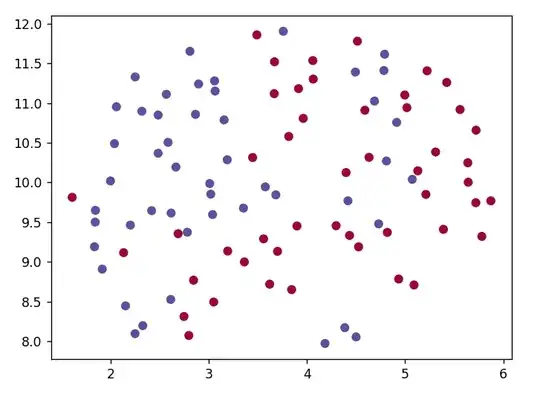

To try and understand what the problem is I created a smaller dataset consisting of 50 positive and 50 negative sentences. The list 'texts' in encoded_texts is the dataset. The picture contains the plot of the embedded texts. I have tried with different umap parameters and still no good result. I also ran K-Means on the not yet embedded data and never got an accuracy over 0.5. How can I improve my embeddings such that clusters are formed? Do you have any ideas on what I could test to better understand where the problem lies?

tokenizer = BertTokenizer.from_pretrained('bert-large-uncased')

encoded_texts = tokenizer.batch_encode_plus(

texts,

max_length=500,

add_special_tokens=True,

return_token_type_ids=False,

padding='longest',

return_attention_mask=True,

return_tensors='pt'

)

input_ids = encoded_texts['input_ids']

attention_masks = encoded_texts['attention_mask']

model = BertModel.from_pretrained('bert-large-uncased')

outputs = model(input_ids, attention_mask=attention_masks)

embeddings = outputs['last_hidden_state']

data = umap.UMAP(n_neighbors=50, min_dist=0.1).fit_transform(embeddings.detach().numpy().reshape(len(texts), -1))

n_clusters = 2

kmeans = KMeans(n_clusters=n_clusters, n_init=30)

kmeans.fit(data)

pred_labels = kmeans.labels_

accuracy = accuracy_score(labels, pred_labels)

print(f"Accuracy: {accuracy}")

plt.scatter(data[:, 0], data[:, 1], c=labels, cmap='Spectral')

plt.show()