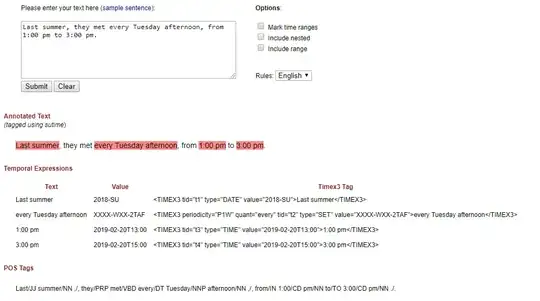

I would like to extract all date information from a given document. I guess this could essentially be done with a lot of regexes, covering formats such as:

- 2019-02-20

- 20.02.2019 ("German format")

- 02/2019 ("February 2019")

- "tomorrow" (`datetime.timedelta(days=1)`)

- "yesterday" (`datetime.timedelta(days=-1)`)
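For reference, the "lot of regexes" approach for the examples above might look roughly like the sketch below, using only the standard library. It is an illustration under assumptions, not a library API: the pattern names, `extract_dates`, and `reference` (the date that "tomorrow"/"yesterday" are resolved against, e.g. the document's creation date) are hypothetical; "02/2019" is represented as the first day of the month; and the German words "morgen"/"gestern" are an added assumption.

```python
import re
from datetime import date, timedelta

# Illustrative patterns for the example formats (assumptions, not a library API).
ISO = re.compile(r"\b(\d{4})-(\d{1,2})-(\d{1,2})\b")        # 2019-02-20
GERMAN = re.compile(r"\b(\d{1,2})\.(\d{1,2})\.(\d{4})\b")    # 20.02.2019
# The lookbehind stops "02/2019" from matching inside "28/02/2019".
MONTH_YEAR = re.compile(r"(?<![\d/.])(\d{1,2})/(\d{4})\b")  # 02/2019

# Hypothetical keyword table: relative words mapped to day offsets.
RELATIVE = {"tomorrow": 1, "yesterday": -1, "morgen": 1, "gestern": -1}
RELATIVE_RE = re.compile(r"\b(" + "|".join(RELATIVE) + r")\b", re.IGNORECASE)


def _safe_date(y, m, d):
    """Build a date, or return None for impossible values such as 31.02.2019."""
    try:
        return date(y, m, d)
    except ValueError:
        return None


def extract_dates(text, reference):
    """Return (matched_text, date) pairs in the order they appear in text."""
    found = []
    for m in ISO.finditer(text):
        found.append((m.start(), m[0], _safe_date(int(m[1]), int(m[2]), int(m[3]))))
    for m in GERMAN.finditer(text):
        found.append((m.start(), m[0], _safe_date(int(m[3]), int(m[2]), int(m[1]))))
    for m in MONTH_YEAR.finditer(text):
        # Month-level precision, represented here as the first day of the month.
        found.append((m.start(), m[0], _safe_date(int(m[2]), int(m[1]), 1)))
    for m in RELATIVE_RE.finditer(text):
        offset = RELATIVE[m[1].lower()]
        found.append((m.start(), m[0], reference + timedelta(days=offset)))
    # Drop impossible dates, then sort by position in the text.
    hits = [h for h in found if h[2] is not None]
    hits.sort(key=lambda h: h[0])
    return [(t, d) for _, t, d in hits]
```

For example, `extract_dates("Am 20.02.2019 und 2019-02-21, see you tomorrow.", date(2019, 2, 20))` yields the German date, the ISO date, and 2019-02-21 for "tomorrow". One caveat of plain keyword matching: "Morgen" in "heute Morgen" means "this morning" and would be misread as "tomorrow".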

Is there a Python package or library that already offers this, or do I have to write all of those regexes and the surrounding logic myself?

I'm interested in information extraction from German and English texts, mainly German.

Constraints

I don't have the complete dataset yet, but I have some idea of what it looks like:

- The interesting dates in the dataset span about 10 years

- I guess the interesting date types are the following, all of which could have spaces in various places:
    1. 28.02.2019
    2. relative ones like "3 days ago"
    3. 28/02/2019
    4. 02/28/2019
    5. 2019-02-28
    6. 2019/02/28
    7. 2019/28/02
    8. 28.2.2019
    9. 28.2
    10. ...

- I have millions of documents; I'd estimate each one has around 20 sentences.

- Most of the data is in German.
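Under these constraints, a regex-based extractor could be sketched as below. This is a hypothetical illustration, not an existing library: it reads dotted forms day-first (the German convention), keeps every valid reading of slash forms (so 03/04/2019 yields both 3 April and 4 March, and 2019/28/02 resolves to 28 February), resolves "28.2" and "3 days ago" / "vor 5 Tagen" against an assumed `reference` date, and discards dates outside an assumed 10-year window (`min_year=2009`, `max_year=2019` are placeholder values). The `\s*` around separators tolerates spaces such as "28. 02. 2019".

```python
import re
from datetime import date, timedelta

# Hypothetical patterns; \s* tolerates spaces around the separators.
YEAR_LAST = re.compile(r"(?<!\d)(\d{1,2})\s*([./])\s*(\d{1,2})\s*\2\s*(\d{4})(?!\d)")
YEAR_FIRST = re.compile(r"(?<!\d)(\d{4})\s*([-/])\s*(\d{1,2})\s*\2\s*(\d{1,2})(?!\d)")
NO_YEAR = re.compile(r"(?<![\d.])(\d{1,2})\s*\.\s*(\d{1,2})\.?(?![\d.])")  # 28.2 / 28.2.
DAYS_AGO = re.compile(r"\b(\d+)\s*days?\s+ago\b|\bvor\s+(\d+)\s*Tag(?:en)?\b", re.IGNORECASE)


def _valid(y, m, d):
    """Return a date, or None if the combination is impossible (e.g. month 28)."""
    try:
        return date(y, m, d)
    except ValueError:
        return None


def extract(text, reference, min_year=2009, max_year=2019):
    """Return (matched_text, candidate_dates) pairs; ambiguous forms keep all valid readings."""
    results, covered = [], []

    def keep(match, readings):
        # Drop impossible readings and anything outside the assumed year window.
        cands = sorted({d for d in readings
                        if d is not None and min_year <= d.year <= max_year})
        covered.append(match.span())
        if cands:
            results.append((match.start(), match[0], tuple(cands)))

    for m in YEAR_LAST.finditer(text):
        a, sep, b, y = int(m[1]), m[2], int(m[3]), int(m[4])
        readings = [_valid(y, b, a)]          # DD.MM.YYYY or DD/MM/YYYY
        if sep == "/":
            readings.append(_valid(y, a, b))  # MM/DD/YYYY (US order)
        keep(m, readings)
    for m in YEAR_FIRST.finditer(text):
        y, sep, a, b = int(m[1]), m[2], int(m[3]), int(m[4])
        readings = [_valid(y, a, b)]          # YYYY-MM-DD or YYYY/MM/DD
        if sep == "/":
            readings.append(_valid(y, b, a))  # YYYY/DD/MM
        keep(m, readings)
    for m in NO_YEAR.finditer(text):
        if any(s <= m.start() < e for s, e in covered):
            continue  # already part of a full date such as "28 . 2 . 2019"
        keep(m, [_valid(reference.year, int(m[2]), int(m[1]))])
    for m in DAYS_AGO.finditer(text):
        keep(m, [reference - timedelta(days=int(m[1] or m[2]))])

    results.sort()
    return [(t, c) for _, t, c in results]
```

Because the patterns are compiled once and each document is scanned linearly, this should be cheap enough to run over millions of short documents, though that is untested here. Deciding between the two readings of an ambiguous slash date (e.g. preferring day-first for German documents) is left to the caller. Note that `NO_YEAR` will also match decimals such as "28.2 kg".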