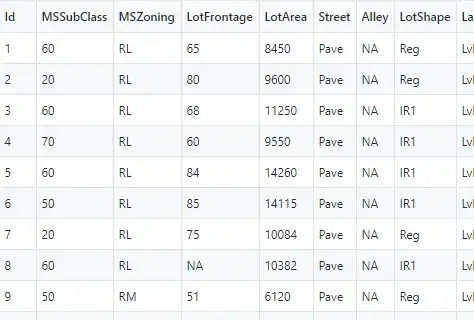

I am currently working on the Boston problem hosted on Kaggle. The dataset is nothing like the Titanic dataset. There are many categorical columns and I'm trying to one-hot-encode these columns. I've decided to go with the column MSZoning to get the approach working and work out a strategy to apply it to other categorical columns. This is a small snippet of the dataset:

Here are the different types of values present in MSZoning, so obviously integer encoding only would be a bad idea:

['RL' 'RM' 'C (all)' 'FV' 'RH']

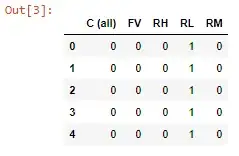

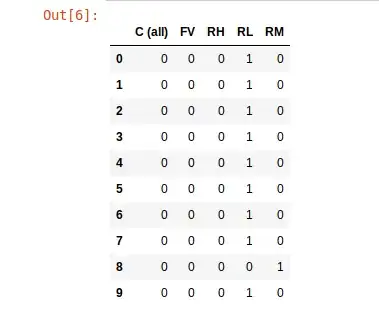

Here is my attempt on Python to assign MSZoning with the new one-hot-encoded data. I do know that one-hot-encoding turns each value into a column of its own and assigns binary values to each of them so I realize that this isn't exactly a good idea. I wanted to try it anyways:

import pandas as pd

from sklearn.preprocessing import LabelEncoder, OneHotEncoder

train = pd.read_csv("https://raw.githubusercontent.com/oo92/Boston-Kaggle/master/train.csv")

test = pd.read_csv("https://raw.githubusercontent.com/oo92/Boston-Kaggle/master/train.csv")

labelEncoder = LabelEncoder()

train['MSZoning'] = labelEncoder.fit_transform(train['MSZoning'])

train_OHE = OneHotEncoder(categorical_features=train['MSZoning'])

train['MSZoning'] = train_OHE.fit_transform(train['MSZoning']).toarray()

print(train['MSZoning'])

Which is giving me the following (obvious) error:

C:\Users\security\Anaconda3\lib\site-packages\sklearn\preprocessing\_encoders.py:392: DeprecationWarning: The 'categorical_features' keyword is deprecated in version 0.20 and will be removed in 0.22. You can use the ColumnTransformer instead.

"use the ColumnTransformer instead.", DeprecationWarning)

Traceback (most recent call last):

File "C:/Users/security/Downloads/AP/Boston-Kaggle/Boston.py", line 11, in <module>

train['MSZoning'] = train_OHE.fit_transform(train['MSZoning']).toarray()

File "C:\Users\security\Anaconda3\lib\site-packages\sklearn\preprocessing\_encoders.py", line 511, in fit_transform

self._handle_deprecations(X)

File "C:\Users\security\Anaconda3\lib\site-packages\sklearn\preprocessing\_encoders.py", line 394, in _handle_deprecations

n_features = X.shape[1]

IndexError: tuple index out of range

I did read through some Medium posts on this but they didn't exactly relate to what I was trying to do with my dataset as they were working with dummy data with a couple of categorical columns. What I want to know is, how do I make use of one-hot-encoding after the (attempted) step?