Please refer to "Pattern Recognition and Machine Learning" by Bishop, page 182.

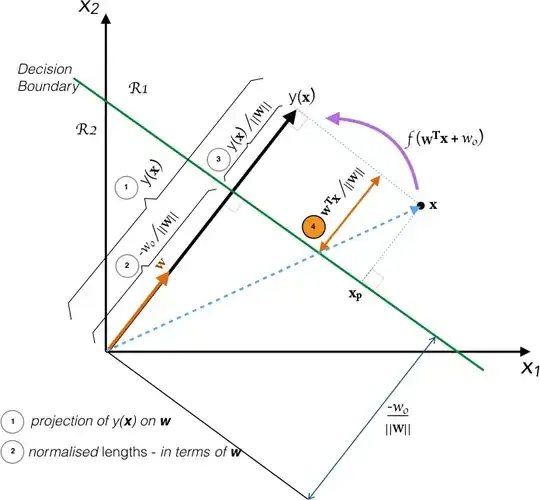

I am struggling to visualize the intuition behind equations 4.6 & 4.7. I am presenting my understanding of section 4.1.1 using the diagram:

Please note: I have used $x_{\perp}$ and $x_{p}$ interchangeably.

Equations 4.6 and 4.7 from the book:

$$\mathbf{x} = \mathbf{x_{\perp}} + \textit{r}\mathbf{\frac{w}{\Vert{w}\Vert}} \tag {4.6}$$

Multiplying both sides of this result by $\mathbf{w^{T}}$ and adding $w_{0}$, and making use of $y(\mathbf{x}) = \mathbf{w^{T}x} + w_{0}$ and $y(\mathbf{x_{\perp}}) = \mathbf{w^{T}x_{\perp}} + w_{0} = 0$, we have

$$r = \frac{y(\mathbf{x})}{\Vert{\mathbf{w}}\Vert} \tag{4.7}$$
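Written out step by step, the manipulation described in that sentence would look roughly as follows. This is my own expansion of the book's wording, so the intermediate lines are not quoted from Bishop; the only facts used are $\mathbf{w^{T}w} = \Vert\mathbf{w}\Vert^{2}$ and $y(\mathbf{x_{\perp}}) = 0$.

$$\begin{aligned}
\mathbf{w^{T}x} + w_{0} &= \mathbf{w^{T}x_{\perp}} + w_{0} + r\,\frac{\mathbf{w^{T}w}}{\Vert\mathbf{w}\Vert} \\
y(\mathbf{x}) &= y(\mathbf{x_{\perp}}) + r\,\frac{\Vert\mathbf{w}\Vert^{2}}{\Vert\mathbf{w}\Vert} \\
y(\mathbf{x}) &= 0 + r\,\Vert\mathbf{w}\Vert
\end{aligned}$$

Dividing the last line by $\Vert\mathbf{w}\Vert$ gives the form of eq. 4.7.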

Questions:

- Is $y(\mathbf{x})$ the (orthogonal) projection of $(\mathbf{w^{T}x} + w_{0})$ along $\mathbf{w}$, the weight vector?

- Are the lengths normalized to express them as multiples of the unit vector $\mathbf{\frac{{w}}{\Vert{w}\Vert}}$? If so, can the distance $r = \frac{y(\mathbf{x})}{\Vert\mathbf{w}\Vert}$ exceed 1?

- Given that $$ y(\mathbf{x}) = \mathbf{w^{T}x} + w_{0}$$ $y(\cdot)$ has two parts: the $\textit{orthogonal component above/below}$ the decision boundary, and the $\textit{bias}$. So I am calculating $y(\cdot)$ as:

$$y(\mathbf{x}) = \mathbf{\frac{w^{T}x}{\Vert{w}\Vert}} + \frac{w_{0}}{\Vert\mathbf{w}\Vert}$$ while the book gets it as: $$y(\mathbf{x}) = {\frac{y(\mathbf{x})}{\Vert\mathbf{w}\Vert}} + \frac{w_{0}}{\Vert\mathbf{w}\Vert}$$ I am struggling to see how we get the first term in the equation above (eq. 4.7 in the book).

Alternatively, to state my doubt in terms of the book's eqs. 4.6 and 4.7: substituting $r$ (eq. 4.7) into eq. 4.6, we get: $$\mathbf{x} = \mathbf{x_{p}} + y(\mathbf{x}) \qquad {(\Vert{\mathbf{w}}\Vert^{2} = \mathbf{w})}$$

which again seems to be incorrect, by the triangle rule of vector addition.
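To make the quantities concrete, here is a minimal numerical sketch in plain Python. The values of `w`, `w0` and `x` are made up purely for illustration (they are not from the book), and `dot` and `y` are small helper functions I introduce here. The sketch evaluates $r$ from eq. 4.7, recovers $\mathbf{x_{\perp}}$ by rearranging eq. 4.6, and then checks whether that point lies on the decision surface.

```python
import math

# Hypothetical values, chosen only so the arithmetic is easy to follow.
w = [3.0, 4.0]   # weight vector, so ||w|| = 5
w0 = -5.0        # bias
x = [4.0, 7.0]   # an arbitrary point in input space


def dot(a, b):
    """Inner product of two equal-length lists."""
    return sum(ai * bi for ai, bi in zip(a, b))


def y(v):
    """Linear discriminant y(v) = w^T v + w0."""
    return dot(w, v) + w0


norm_w = math.sqrt(dot(w, w))

# Eq. 4.7: r is a scalar.
r = y(x) / norm_w

# Eq. 4.6 rearranged: x_perp = x - r * w / ||w||, a vector with the same
# dimension as x (r scales the unit vector w / ||w||).
x_perp = [xi - r * wi / norm_w for xi, wi in zip(x, w)]

print("y(x)      =", y(x))
print("r         =", r)
print("x_perp    =", x_perp)
print("y(x_perp) =", y(x_perp))  # should be 0 up to floating-point rounding
```

With these particular numbers $r$ comes out as 7, so in this sketch at least $r$ is not confined to $[-1, 1]$. Note also that the term added to $\mathbf{x_{\perp}}$ is the vector $r\,\mathbf{w}/\Vert\mathbf{w}\Vert$ and not the scalar $y(\mathbf{x})$.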

Given this context, where am I losing track? I would appreciate your input.