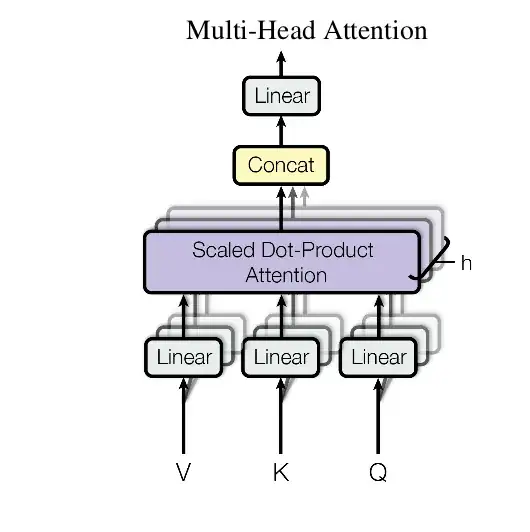

My question regards this image:

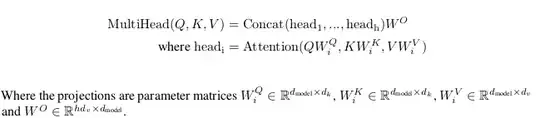

It seems that after the multi head attention there is a linear layer as they mention also from here:

the linearity is given by the weights W^{o}. my quesion is: for the decoder, doesn't this linear layer mess up with the masking of the attention? I mean, if each token from the attention layer should not depend from the next tokens then these weights seem to mess up the whole thing or they don't? Indeed these linear weights will learn the dependencies among all the tokens and during inference there could be a problem, maybe(?). Thanks for any clarification.