I apologise if this is a bit long winded, but it was suggested by another user that I post.

I will start by saying that I am very new to the world of machine learning and deep learning. As such, the most important thing I am after is the understanding of what I am doing.

I am trying to build an ANN for binary classification.

I have a binary feature matrix in the form of N x D, where N is number of samples and D is number of features. In my dataset N has a max of ~ 2 million - but I run my testing on ~ 500k due to the time it takes to run (even when utilising my GPU). If I get a promising validation curve after testing, I will run on the full dataset to verify. D in my dataset is 5. Thus, I have a feature matrix of the form 500000 x 5. A snippet below:

[[0 1 0 1 1]

[0 1 1 0 1]

[0 0 1 0 1]

[1 1 0 1 1]

[1 1 0 0 0]

[0 1 1 0 1]

[0 1 0 0 0]

[1 1 0 1 1]

[0 0 0 1 1]

[1 0 0 0 1]]

I have a target matrix in binary form, snippet below:

[1 0 1 1 0 0 1 0 1 1]

Based on my understanding, for binary classification, the Input layer should be the same as D, and your output layer should have 1 node and it should have a sigmoid activation function.

Thus, I have taken this approach. Now, I also understand that machine/ deep learning is a lot of experimentation and so I have gone through many different iterations of changing the number of hidden layers, as well as changing the number of nodes per hidden layer - all to no seemingly obvious benefit. I have also played around with the following: learning rate (for Adam optimiser), train to test ratio (currently at 0.33), random state variable (when splitting the dataset - currently at 42), batch size (currently at 128), epochs (currently at 50). All of this experimentation with these variables still lead to a high validation loss, as I will show further below.

Now, for my code. Below is my code to split the data in to train and test.

train_to_test_ratio = 0.33

random_state_ = 42

# split the data into train and test sets

# this lets us simulate how our model will perform in the future

x_train, x_test, y_train, y_test = train_test_split(X, Y, test_size=train_to_test_ratio, random_state=random_state_)

N, D = x_train.shape

Below is my code to to build the model. You can see I currently have 3 hidden layers. I have played around this a lot - from 1 hidden layer to 10 with varying nodes per layer. The best I have found is the below 3 hidden layers. The learning rate is currently 0.001 which creates the best loss curve - anything bigger is too high.

learning_rate = 0.001

# build the model

i = Input(shape=(look_back_period,))

x = Dense(8, activation="relu")(i)

x = Dense(16, activation="relu")(x)

x = Dense(32, activation="relu")(x)

#x = Dense(32, activation="relu")(x)

#x = Dense(64, activation="relu")(x)

#x = Dense(128, activation="relu")(x)

#x = Dense(64, activation="relu")(x)

#x = Dense(32, activation="relu")(x)

#x = Dense(16, activation="relu")(x)

#x = Dense(4, activation="relu")(x)

x = Dense(1, activation="sigmoid")(x)

model = Model(i, x)

model.compile(optimizer=Adam(lr=learning_rate), loss="binary_crossentropy", metrics=["accuracy"])

I then train the model:

# train the model

# trains on first half of dataset, and tests on second half

b_size = 128

iterations = 50

r = model.fit(x_train, y_train, validation_data=(x_test, y_test), epochs=iterations, batch_size=b_size)

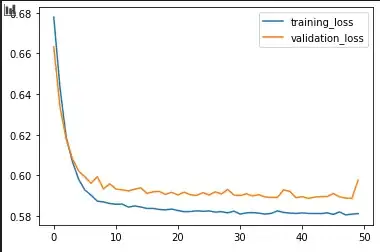

Here is the validation curves:

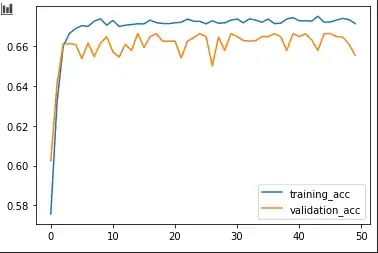

Here is the accuracy curves:

As you can see the validation loss is very high, and the accuracy is not too high, which to me the model is failing.

What do these outputs mean? In the sense of with all the experimentation I still cannot get the validation loss low with high accuracy. This to me is that the model is incorrect. But what would be the correct model? Is there any advice that can be given to better understand how to move forward and build a better model?