I trained a LightGBM regression model, optimizing RMSE and also using RMSE as the evaluation metric:

model = LGBMRegressor(objective="regression", n_estimators=500, n_jobs=8)

model.fit(X_train, y_train, eval_metric="rmse", eval_set=[(X_train, y_train), (X_test, y_test)], early_stopping_rounds=20)

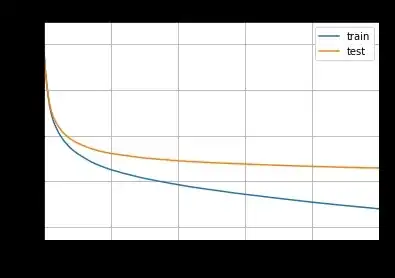

The model keeps improving throughout the 500 iterations. Here is the MAE I obtain:

MAE on train: 1.080571
MAE on test: 1.258383

But the metric I'm really interested in is MAE, so I decided to optimize it directly (and choose it as the evaluation metric):

model = LGBMRegressor(objective="regression_l1", n_estimators=500, n_jobs=8)

model.fit(X_train, y_train, eval_metric="mae", eval_set=[(X_train, y_train), (X_test, y_test)], early_stopping_rounds=20)

Contrary to what I expected, the MAE gets worse on both train and test:

MAE on train: 1.277689
MAE on test: 1.285950

Looking at the training logs, the model seems stuck in a local minimum and stops improving after about 100 trees. Could the problem be linked to the non-differentiability of MAE?
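To make the non-differentiability concern concrete, here is a minimal sketch in plain Python of the first and second derivatives that a gradient-boosting step would see for squared error versus absolute error. The function names `l2_grad_hess` and `l1_grad_hess` are made up for illustration, and this is not LightGBM's internal implementation; it only shows the textbook derivatives of the two losses.

```python
def l2_grad_hess(y_true, y_pred):
    """First and second derivative of 0.5 * (y_pred - y_true)**2 w.r.t. y_pred."""
    return y_pred - y_true, 1.0


def l1_grad_hess(y_true, y_pred):
    """(Sub)gradient and second derivative of |y_pred - y_true| w.r.t. y_pred."""
    diff = y_pred - y_true
    # Sign of the residual: -1, 0 or +1. The loss has a kink at diff == 0,
    # where 0 is just one valid choice of subgradient.
    grad = float((diff > 0) - (diff < 0))
    # Away from the kink the absolute value is linear, so its curvature is zero.
    return grad, 0.0


y_true = 10.0
for y_pred in (0.0, 9.0, 9.99, 10.01, 20.0):
    print(y_pred, l2_grad_hess(y_true, y_pred), l1_grad_hess(y_true, y_pred))
```

With squared error the gradient shrinks as the prediction approaches the target, whereas with absolute error it always has magnitude 1 and carries no curvature information, so the gradient alone does not tell how large a step to take. Whether this is what actually stalls my model is exactly what I am unsure about.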

Here are the learning curves: