I am reading a presentation and it recommends not using leave one out encoding, but it is okay with one hot encoding. I thought they both were the same. Can anyone describe what the differences between them are?

Asked

Active

Viewed 1.3k times

19

-

1It's not clear (from just your question) what leave-on-out even is. You should edit this to give a pointer and explain briefly your understanding of the two, and why you think they are the same. – Sean Owen Mar 23 '16 at 13:31

-

[leave one out, from scikit learn contrib categorical project](https://contrib.scikit-learn.org/categorical-encoding/leaveoneout.html) – mork Mar 18 '19 at 08:34

-

OHE and LOO are #2 and #10 in [11 Categorical Encoders and Benchmark](https://www.kaggle.com/code/subinium/11-categorical-encoders-and-benchmark/notebook#10.-Leave-one-out-Encoder-(LOO-or-LOOE)) respectively. – smci Apr 21 '22 at 07:20

1 Answers

20

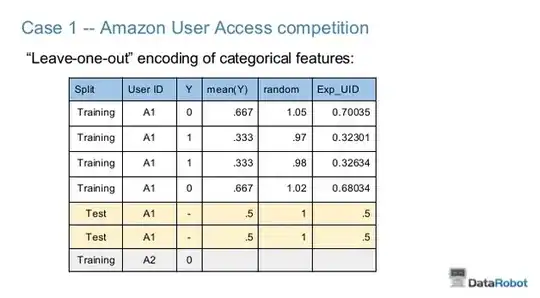

They are probably using "leave one out encoding" to refer to Owen Zhang's strategy.

From here

The encoded column is not a conventional dummy variable, but instead is the mean response over all rows for this categorical level, excluding the row itself. This gives you the advantage of having a one-column representation of the categorical while avoiding direct response leakage

Zephyr

- 997

- 4

- 10

- 20

Dex Groves

- 386

- 1

- 4

-

1Your explanation is better than wacax's in the referred link, thank you – Allan Ruin Aug 12 '16 at 15:00

-

Hi @Dex Groves, so the leave_one_out encoding for the test is always .5? – user7117436 Mar 24 '17 at 20:29

-

3Hi! As seen from the picture, this paticular example relates to classification problem. Does anybody have an experience with LOO encoding within regression problem? The main question is how to aggregate the target variable. I am now making experiments and get huge overfitting with mean(y). – Alexey Trofimov Jun 19 '17 at 12:49

-

1for a clustering (unsupervised) problem, is possible to use this kind of encoding? – enneppi Sep 13 '18 at 10:26

-

@AlexeyTrofimov - try an aggregation with a lower variance. I'd start with different binning (like 1K, 2K, 2M, .. for large y int values, or some rounding to a decimal place for y float values) => mean(bin_f(y)) – mork Mar 18 '19 at 08:40

-

1@enneppi - the whole idea is to "tie" your categorical feature to the target "y", which you're missing in your unsupervised ML. You could try "tying" your categorical feature into other X features (a kind of feature engineering) – mork Mar 18 '19 at 08:46