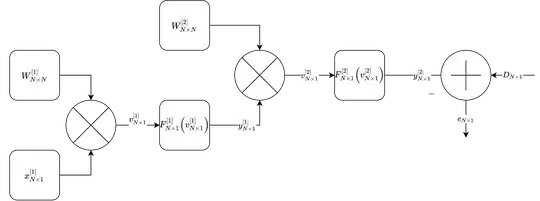

I'm having trouble computing the derivative of the sum-of-squares error in a backpropagation neural network. For example, suppose we have the neural network shown in the picture. To keep the drawing simple, I've dropped the sample indices.

Conventions:

- x - input data.

- W - weight matrix.

- v - the product vector W*x.

- F - vector of activation functions.

- y - vector of activated outputs.

- D - vector of target answers.

- e - error signal.

- subscript, e.g. (NxN) - dimensionality of the variable.

- superscript in brackets, [index] - layer number.

The Jacobian is defined as:

$$
\begin{pmatrix}\frac{\partial f_1(x_1)}{\partial x_1}&\dots&\frac{\partial f_1(x_N)}{\partial x_N}\\\vdots&\ddots&\vdots\\\frac{\partial f_M(x_1)}{\partial x_1}&\dots&\frac{\partial f_M(x_N)}{\partial x_N}\end{pmatrix}
$$

Let's look at the second layer and find the derivative using the chain rule:

The optimization error is the sum of squared errors:

The chain rule for the second layer is:

I'm going to differentiate according to the rules found here: https://fmin.xyz/docs/theory/Matrix_calculus/
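For reference, one way to write the loss and the second-layer chain rule in the conventions above. This is a sketch under assumptions that are not stated in the post: the error signal is taken as $e = D - y^{[2]}$, the loss carries the usual factor of $\tfrac{1}{2}$, and the second layer's input is $y^{[1]}$.

```latex
E = \frac{1}{2}\, e^{\top} e, \qquad
e = D - y^{[2]}, \qquad
y^{[2]} = F\!\left(v^{[2]}\right), \qquad
v^{[2]} = W^{[2]} y^{[1]}

\frac{\partial E}{\partial W^{[2]}}
  = \frac{\partial E}{\partial e}\,
    \frac{\partial e}{\partial y^{[2]}}\,
    \frac{\partial y^{[2]}}{\partial v^{[2]}}\,
    \frac{\partial v^{[2]}}{\partial W^{[2]}}
```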

- NxN matrix

- NxN matrix

- NxN matrix (look at Jacobian)

- NxN matrix (look at Jacobian)

- Nx2N Tensor product (try yourself for 2x2)

- Nx2N Tensor product (try yourself for 2x2)

So the differential is:

(You can verify the equations with this service: http://www.matrixcalculus.org/ or by hand for the 2x2 case.)

Let's look at the dimensions: (1xN) x (NxN) x (NxN) x (Nx2N) = (1x2N)
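A quick numerical way to sanity-check the shapes in such a chain is to build each factor explicitly for some N other than 2. The sketch below is only an illustration under assumptions not taken from the post: a single layer with tanh activation, `e = D - y`, a loss of `0.5 * sum(e**2)`, and W flattened row by row. Every variable name is hypothetical. Note that for N = 2, N*N equals 2N, so the 2x2 case cannot tell an Nx2N factor from an NxN² one.

```python
import numpy as np

N = 3  # hypothetical layer width, chosen so that N*N differs from 2*N
rng = np.random.default_rng(0)
W = rng.normal(size=(N, N))   # weight matrix
x = rng.normal(size=N)        # layer input
D = rng.normal(size=N)        # target vector


def loss(W_mat):
    """Assumed loss: half the sum of squared errors with tanh activation."""
    y_out = np.tanh(W_mat @ x)
    return 0.5 * np.sum((D - y_out) ** 2)


y = np.tanh(W @ x)
e = D - y                                     # assumed error signal

dE_de = e.reshape(1, N)                       # 1 x N
de_dy = -np.eye(N)                            # N x N
dy_dv = np.diag(1.0 - y ** 2)                 # N x N, tanh'(v) on the diagonal
dv_dW = np.kron(np.eye(N), x.reshape(1, N))   # N x (N*N), W flattened row-major

grad_row = dE_de @ de_dy @ dy_dv @ dv_dW      # 1 x (N*N)
grad_W = grad_row.reshape(N, N)               # same shape as W

# Central finite differences as an independent check of grad_W.
eps = 1e-6
numeric = np.zeros_like(W)
for i in range(N):
    for j in range(N):
        step = np.zeros_like(W)
        step[i, j] = eps
        numeric[i, j] = (loss(W + step) - loss(W - step)) / (2 * eps)

print(dv_dW.shape, grad_row.shape, grad_W.shape)
print(np.allclose(grad_W, numeric, atol=1e-6))
```

Under these assumptions the product of the four factors is a row of N*N entries, which reshapes to the NxN layout of W.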

When we try to use gradient descent

- The dimensions will not match: (NxN) = (NxN) - (1x2N)

Therefore, we cannot update the coefficients this way.
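For comparison, here is a minimal sketch of a gradient-descent step in which the gradient is kept in the same NxN layout as W, using the outer-product form commonly seen in backprop write-ups. The tanh activation, `e = D - y`, the 1/2 factor in the loss, the learning rate `lr`, and all names are assumptions made for illustration, not the setup from the picture.

```python
import numpy as np

N = 3      # hypothetical layer width
lr = 0.01  # hypothetical learning rate
rng = np.random.default_rng(1)
W = rng.normal(size=(N, N))   # weight matrix
x = rng.normal(size=N)        # layer input
D = rng.normal(size=N)        # target vector


def loss(W_mat):
    """Assumed loss: half the sum of squared errors with tanh activation."""
    return 0.5 * np.sum((D - np.tanh(W_mat @ x)) ** 2)


y = np.tanh(W @ x)
e = D - y                        # assumed error signal
delta = -e * (1.0 - y ** 2)      # dE/dv, shape (N,)
grad_W = np.outer(delta, x)      # dE/dW laid out like W, shape (N, N)

W_new = W - lr * grad_W          # shapes agree: (N, N) - (N, N)

print(W.shape, grad_W.shape, W_new.shape)
print(loss(W), loss(W_new))
```

Here `grad_W[i, j]` holds the derivative of the loss with respect to `W[i, j]`, so the subtraction is elementwise.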

Where did I make a mistake? Any comments are welcome. Thanks!