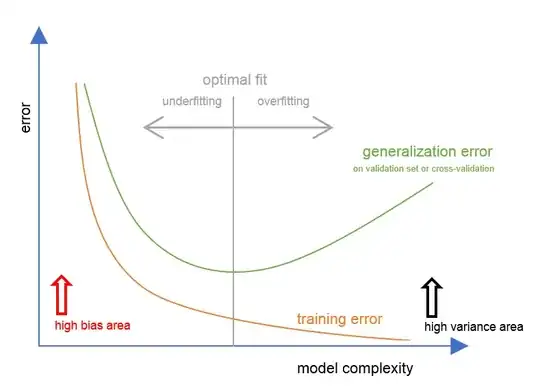

What is the exact relation between "underfitting" and "high bias and low variance"? They seem to be tightly related concepts, but still two distinct things.

The same goes for "overfitting" vs. "high variance and low bias": are they the same thing, or only closely related?

For example, Wikipedia states:

"The bias error is an error from erroneous assumptions in the learning algorithm. High bias can cause an algorithm to miss the relevant relations between features and target outputs (underfitting). The variance is an error from sensitivity to small fluctuations in the training set. High variance may result from an algorithm modeling the random noise in the training data (overfitting)."

Kaggle says:

"In a nutshell, Underfitting – High bias and low variance"

"In simple terms, High Bias implies underfitting"

Or, from another question on Stack Exchange:

"underfitting is associated with high bias and over fitting is associated with high variance"
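
To make concrete what I mean by bias and variance in the Wikipedia sense, here is a minimal sketch of how I would estimate them empirically. Everything in it is my own assumption for illustration, not taken from any of the sources above: the true function `sin(2πx)`, Gaussian noise, a fixed grid of inputs where only the noise is redrawn for each training set, polynomial models of degree 1 and 9, and the helper name `bias_variance`.

```python
import numpy as np
from numpy.polynomial import polynomial as P


def bias_variance(degree, n_trials=200, n_train=20, noise=0.3, seed=0):
    """Estimate squared bias and variance of a polynomial fit of the given degree.

    Assumed setup (illustrative only): fixed training inputs on [0, 1],
    true function sin(2*pi*x), fresh Gaussian noise for every training set.
    """
    rng = np.random.default_rng(seed)
    x_train = np.linspace(0.0, 1.0, n_train)
    x_test = np.linspace(0.0, 1.0, 50)
    f_train = np.sin(2 * np.pi * x_train)
    f_test = np.sin(2 * np.pi * x_test)

    # One row of test predictions per simulated training set.
    preds = np.empty((n_trials, x_test.size))
    for t in range(n_trials):
        y_train = f_train + rng.normal(0.0, noise, n_train)
        coefs = P.polyfit(x_train, y_train, degree)
        preds[t] = P.polyval(x_test, coefs)

    mean_pred = preds.mean(axis=0)
    # Squared bias: how far the average prediction is from the true function.
    bias_sq = np.mean((mean_pred - f_test) ** 2)
    # Variance: how much predictions move between training sets.
    variance = np.mean(preds.var(axis=0))
    return bias_sq, variance


results = {d: bias_variance(d) for d in (1, 9)}
for d, (b, v) in results.items():
    print(f"degree={d}: bias^2={b:.4f}, variance={v:.4f}")
```

If I understand the quotes correctly, the degree-1 fit should show the larger squared bias and the degree-9 fit the larger variance, which is the pattern they label underfitting and overfitting. My question is whether those labels are the same thing as these two numbers, or only usually go along with them.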