Imagine we have a model built as a scikit-learn pipeline:

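```python
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import MinMaxScaler, OneHotEncoder

# num_cols, cat_cols: lists of numeric / categorical column names (defined elsewhere)

# pipeline for numerical data
num_preprocessing = Pipeline([
    ('num_imputer', SimpleImputer(strategy='mean')),  # imputing with mean
    ('minmaxscaler', MinMaxScaler()),                 # scaling
])

# pipeline for categorical data
cat_preprocessing = Pipeline([
    ('cat_imputer', SimpleImputer(strategy='constant', fill_value='missing')),  # filling missing values
    ('onehot', OneHotEncoder(drop='first', handle_unknown='ignore')),          # one-hot encoding
])

# preprocessor - combining pipelines
preprocessor = ColumnTransformer([
    ('numerical', num_preprocessing, num_cols),
    ('categorical', cat_preprocessing, cat_cols),
])
```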

We optimize the hyperparameters with Optuna (it's a CatBoost model, but the question applies to any algorithm that uses early stopping):

```python
import optuna
from catboost import CatBoostClassifier
from imblearn.over_sampling import SMOTE
from imblearn.pipeline import Pipeline as ImbPipeline  # sklearn's Pipeline rejects samplers such as SMOTE
from sklearn.model_selection import StratifiedKFold, cross_val_score

# X_train, y_train and `preprocessor` are defined as above

# initial params
cb_ini_params = {'learning_rate': 0.07971583985000945,
                 'depth': 8,
                 'verbose': False,
                 'boosting_type': 'Plain',
                 'n_estimators': 10,
                 'bootstrap_type': 'MVS',
                 'colsample_bylevel': 0.09968684497419562,
                 'objective': 'Logloss'}


def objective(trial):
    # parameters
    params = {
        'objective': trial.suggest_categorical('objective', ['Logloss', 'CrossEntropy']),
        'colsample_bylevel': trial.suggest_float('colsample_bylevel', 0.01, 0.1),
        'depth': trial.suggest_int('depth', 1, 10),
        'learning_rate': trial.suggest_float('learning_rate', 1e-5, 1e-1, log=True),  # suggest_loguniform is deprecated
        'boosting_type': trial.suggest_categorical('boosting_type', ['Ordered', 'Plain']),
        'n_estimators': trial.suggest_int('n_estimators', 10, 1000),
        'bootstrap_type': trial.suggest_categorical('bootstrap_type', ['Bayesian', 'Bernoulli', 'MVS']),
        'used_ram_limit': '32gb',
    }

    # parameters that only apply to some bootstrap types
    if params['bootstrap_type'] == 'Bayesian':
        params['bagging_temperature'] = trial.suggest_float('bagging_temperature', 0, 10)
    elif params['bootstrap_type'] == 'Bernoulli':
        params['subsample'] = trial.suggest_float('subsample', 0.1, 1)

    # model
    clf_pipe = ImbPipeline([('preprocessor', preprocessor),
                            ('smote', SMOTE(random_state=42)),
                            ('clf', CatBoostClassifier(**params))])

    # cv
    cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=42)

    # mean CV accuracy is the value Optuna maximizes
    return cross_val_score(clf_pipe, X_train, y_train, n_jobs=-1, cv=cv, scoring='accuracy').mean()


study = optuna.create_study(direction='maximize')
study.enqueue_trial(cb_ini_params)  # evaluate the initial params first
study.optimize(objective, n_trials=100)
```

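Because fit parameters such as `clf__eval_set` are passed directly to the CatBoost step and do not go through the pipeline's preprocessing, we need to transform `X_test` ourselves:

```python
import pandas as pd

# cb_clf: the full pipeline (preprocessor + SMOTE + CatBoost) built with the best Optuna params;
# its preprocessor must be fitted before transformers_ can be read
cb_clf.named_steps['preprocessor'].fit(X_train)

# numeric labels
nums = list(cb_clf.named_steps['preprocessor'].transformers_[0][2])

# categorical labels
cats = cb_clf.named_steps['preprocessor'].transformers_[1][1]['onehot'].get_feature_names_out(cat_cols).tolist()

# labels
feats = nums + cats

# transformed X_test
X_test_transformed = pd.DataFrame(cb_clf.named_steps['preprocessor'].transform(X_test), columns=feats)
```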

In order to avoid overfitting, we now use the eval_set to find the best iteration:

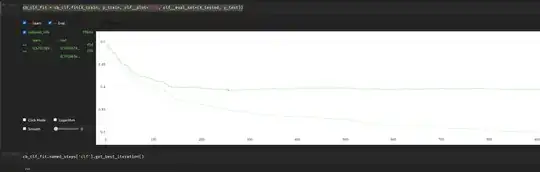

cb_clf_fit = cb_clf.fit(X_train, y_train, clf__plot=True, clf__eval_set=(X_test_transformed, y_test))

And get:

My question is: what next? What is the proper way to train the final model using the information from the best iteration? Should I just run:

```python
final_cb = cb_clf.fit(X, y, clf__early_stopping_rounds=256)
```

and then save `final_cb` to a pickle file and use it in production as the final model? Or should I pass 256 to the `n_estimators` parameter instead?
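The two options above use a number like 256 in different roles. As a rough, library-agnostic sketch of patience-based early stopping (not CatBoost's internal code; the function name `early_stopping` and the loss values are hypothetical), `early_stopping_rounds` is how many consecutive non-improving iterations are tolerated, while the 0-based best iteration determines how many trees the selected model keeps (best iteration + 1):

```python
def early_stopping(val_losses, early_stopping_rounds):
    """Simulate patience-based early stopping on a sequence of validation losses.

    Returns (best_iteration, stop_iteration), both 0-based. Training stops once
    `early_stopping_rounds` iterations have passed without a new best loss.
    """
    best_loss = float("inf")
    best_iteration = -1
    for i, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_iteration = loss, i  # new best: the patience window restarts here
        elif i - best_iteration >= early_stopping_rounds:
            return best_iteration, i  # patience exhausted
    return best_iteration, len(val_losses) - 1  # ran out of iterations without stopping early


# Hypothetical validation losses: no improvement after iteration 2
losses = [0.70, 0.60, 0.55, 0.56, 0.57, 0.58, 0.59]
best_iteration, stopped_at = early_stopping(losses, early_stopping_rounds=3)

# Number of trees in the best model (a candidate n_estimators for a refit without eval_set)
n_trees_to_keep = best_iteration + 1
print(best_iteration, stopped_at, n_trees_to_keep)  # → 2 5 3
```

Note that patience only has an effect when there is a validation set to monitor. On the CatBoost side, the best iteration of a model fitted with `eval_set` should be retrievable with `get_best_iteration()`.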