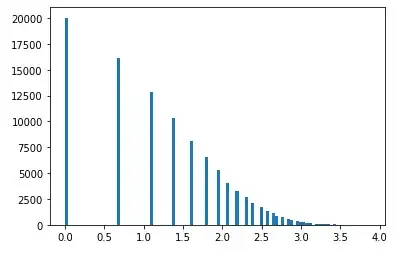

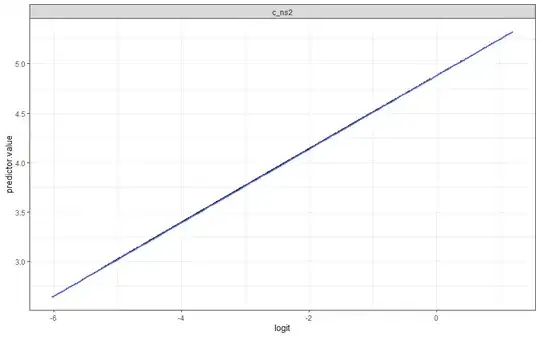

Question: Why is my continuous predictor perfectly correlated with the logit of the predicted probabilities when I check the linearity assumption of my logistic regression model?

Code:

```r
library(dplyr)
library(tidyr)
library(ggplot2)

# Linearity in the logit: check that each continuous predictor is linearly
# related to the logit of the outcome by plotting each predictor against the logit

# Select only the continuous predictors
glm_h2_a1 <- df_master_aus %>%
  dplyr::select(c_ns2)

predictors <- colnames(glm_h2_a1)

# Bind the logit and tidy the data for plotting
# (`probabilities` is assumed to hold the model's fitted probabilities,
#  e.g. from predict(<model>, type = "response"); defined earlier, not shown)
glm_h2_a1 <- glm_h2_a1 %>%
  mutate(logit = log(probabilities / (1 - probabilities))) %>%
  gather(key = "predictors", value = "predictor.value", -logit)

# Create the scatter plots
ggplot(glm_h2_a1, aes(logit, predictor.value)) +
  geom_point(size = 0.5, alpha = 0.5) +
  geom_smooth(method = "loess") +
  theme_bw() +
  facet_wrap(~predictors, scales = "free_y")
```
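A minimal reproducible sketch of the same check on simulated data, using base R only. The data, the names `sim`, `x`, `y`, `fit`, and the coefficients are hypothetical and not taken from `df_master_aus`. With `x` as the only predictor in the model, the logit of the fitted probabilities is exactly the linear predictor `b0 + b1 * x`, so its correlation with `x` is 1 (or -1) by construction:

```r
# Hypothetical simulated data: one continuous predictor x and a binary outcome y
set.seed(42)
n <- 200
x <- rnorm(n)
y <- rbinom(n, size = 1, prob = plogis(-0.5 + 1.2 * x))
sim <- data.frame(x = x, y = y)

# Fit a logistic regression with x as the ONLY predictor
fit <- glm(y ~ x, data = sim, family = binomial)

# Fitted probabilities, then their logit (same formula as in the question)
probabilities <- predict(fit, type = "response")
logit <- log(probabilities / (1 - probabilities))

# With a single predictor the logit equals b0 + b1 * x (up to rounding error)
b <- coef(fit)
max_abs_diff <- max(abs(logit - (b[1] + b[2] * sim$x)))
print(max_abs_diff)

# Hence the logit and x are perfectly linearly correlated
r <- cor(logit, sim$x)
print(r)
```

The same holds for any data set: with one predictor, a scatter plot of that predictor against the logit can only ever show a straight line.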

Note: in a more complex model with additional predictors, not all predictors show such linearity.
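For comparison, a sketch with two hypothetical simulated predictors `x1` and `x2` (all names and coefficients are illustrative assumptions). The logit is still an exact linear function of both predictors jointly, but plotted against `x1` alone it also carries the `x2` term, so the correlation is no longer 1. This only illustrates why the single-predictor plot differs; it is not itself a test of the linearity assumption.

```r
# Hypothetical simulated data with TWO continuous predictors
set.seed(1)
n <- 200
x1 <- rnorm(n)
x2 <- rnorm(n)
y <- rbinom(n, size = 1, prob = plogis(0.3 + 1.0 * x1 - 1.5 * x2))
sim_multi <- data.frame(x1 = x1, x2 = x2, y = y)

# Fit the model with both predictors
fit_multi <- glm(y ~ x1 + x2, data = sim_multi, family = binomial)
prob_multi <- predict(fit_multi, type = "response")
logit_multi <- log(prob_multi / (1 - prob_multi))

# Against x1 alone, the logit scatters because it also depends on x2
r_x1 <- cor(logit_multi, sim_multi$x1)
print(r_x1)

# Jointly, the logit is still exactly b0 + b1 * x1 + b2 * x2
b <- coef(fit_multi)
max_abs_diff_multi <- max(abs(
  logit_multi - (b[1] + b[2] * sim_multi$x1 + b[3] * sim_multi$x2)
))
print(max_abs_diff_multi)
```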