I am using an LSTM to classify the origin of people's names.

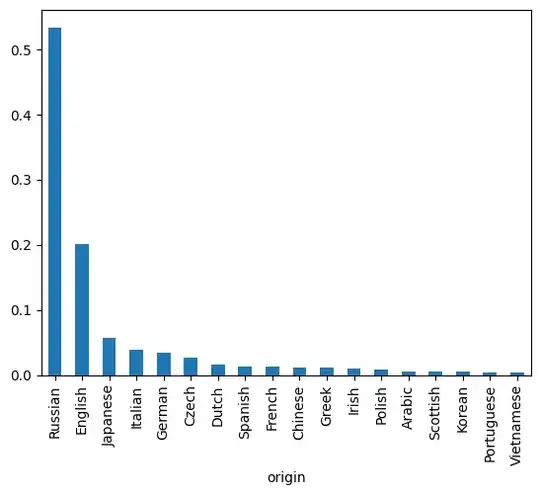

The input data is imbalanced across the target classes, so I used oversampling to balance it.
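The post does not say how the oversampling was done. Below is a minimal sketch of plain random oversampling, which duplicates randomly chosen examples of the smaller classes until every class is as large as the biggest one. It assumes the data is a list of `(name, label)` pairs, and the helper names and toy data are hypothetical. The test set is split off first and only the training part is oversampled, so the test set keeps its natural imbalance.

```python
import random
from collections import Counter

def split_train_test(samples, test_fraction=0.2, seed=0):
    """Shuffle (name, label) pairs and split them; the test part keeps the natural imbalance."""
    rng = random.Random(seed)
    shuffled = list(samples)
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

def random_oversample(samples, seed=0):
    """Duplicate random examples of each smaller class until every class matches the largest."""
    rng = random.Random(seed)
    by_label = {}
    for name, label in samples:
        by_label.setdefault(label, []).append((name, label))
    target = max(len(group) for group in by_label.values())
    balanced = []
    for group in by_label.values():
        balanced.extend(group)
        # sample with replacement to fill the gap up to the largest class size
        balanced.extend(rng.choices(group, k=target - len(group)))
    rng.shuffle(balanced)
    return balanced

# Toy data (hypothetical): English dominates, Japanese and Italian are minorities.
data = [("Smith", "English"), ("Jones", "English"), ("Brown", "English"),
        ("Taylor", "English"), ("Tanaka", "Japanese"), ("Sato", "Japanese"),
        ("Rossi", "Italian"), ("Bianchi", "Italian"), ("Ricci", "Italian"),
        ("Walker", "English")]

train, test = split_train_test(data, test_fraction=0.2, seed=0)
train_balanced = random_oversample(train, seed=0)  # only the training split is oversampled
print(Counter(label for _, label in train))           # imbalanced counts
print(Counter(label for _, label in train_balanced))  # equal counts per class
```

The order of the two steps matters. If duplicates are created before splitting, copies of the same name can end up in both the training and the test set.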

I then defined a simple LSTM model as follows:

```
LSTM_with_Embedding(
  (embedding): Embedding(32, 10, padding_idx=31)
  (lstm): LSTM(10, 32, batch_first=True)
  (linear): Linear(in_features=32, out_features=18, bias=True)
)
```
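The post only shows the printed module, not its code. Below is a sketch of a PyTorch module that prints the same structure. It rests on several assumptions: names are right-padded with index 31, and the classifier reads the LSTM's final hidden state, using `pack_padded_sequence` so that padding steps are skipped. The 31-character `ALPHABET` and the `encode_batch` helper are hypothetical.

```python
import torch
import torch.nn as nn
from torch.nn.utils.rnn import pack_padded_sequence

ALPHABET = "abcdefghijklmnopqrstuvwxyz '-.,"  # hypothetical 31-character alphabet
CHAR_TO_IDX = {c: i for i, c in enumerate(ALPHABET)}
PAD_IDX = len(ALPHABET)  # 31, so the vocabulary size is 32 as in Embedding(32, 10, padding_idx=31)
NUM_CLASSES = 18

class LSTM_with_Embedding(nn.Module):
    def __init__(self, vocab_size=PAD_IDX + 1, embed_dim=10, hidden_dim=32,
                 num_classes=NUM_CLASSES, pad_idx=PAD_IDX):
        super().__init__()
        self.embedding = nn.Embedding(vocab_size, embed_dim, padding_idx=pad_idx)
        self.lstm = nn.LSTM(embed_dim, hidden_dim, batch_first=True)
        self.linear = nn.Linear(hidden_dim, num_classes)

    def forward(self, x, lengths):
        # x: (batch, max_len) character indices, right-padded with PAD_IDX
        embedded = self.embedding(x)  # (batch, max_len, embed_dim)
        # packing makes the final hidden state come from each name's last real character
        packed = pack_padded_sequence(embedded, lengths.cpu(), batch_first=True,
                                      enforce_sorted=False)
        _, (h_n, _) = self.lstm(packed)  # h_n: (1, batch, hidden_dim), original batch order
        return self.linear(h_n[-1])      # logits: (batch, num_classes)

def encode_batch(names):
    """Turn names into a padded index tensor plus their true lengths (hypothetical helper)."""
    seqs = [[CHAR_TO_IDX[c] for c in name.lower()] for name in names]
    lengths = torch.tensor([len(s) for s in seqs])
    batch = torch.full((len(seqs), int(lengths.max())), PAD_IDX, dtype=torch.long)
    for row, seq in enumerate(seqs):
        batch[row, :len(seq)] = torch.tensor(seq)
    return batch, lengths

model = LSTM_with_Embedding()
print(model)
x, lengths = encode_batch(["Smith", "Nakamura", "O'Neil"])
logits = model(x, lengths)
print(logits.shape)  # torch.Size([3, 18])
```

The logits are raw scores. They would normally go straight into `nn.CrossEntropyLoss`, which applies the softmax internally.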

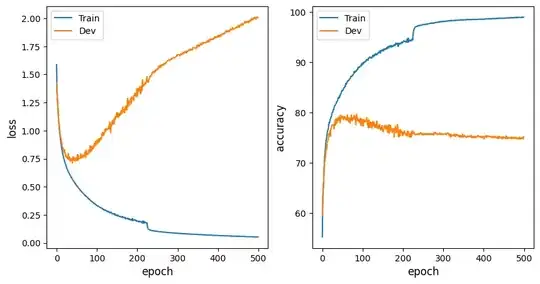

First, I trained this model using the unbalanced data, and got the following results:

Clearly, I am in the overfitting zone.

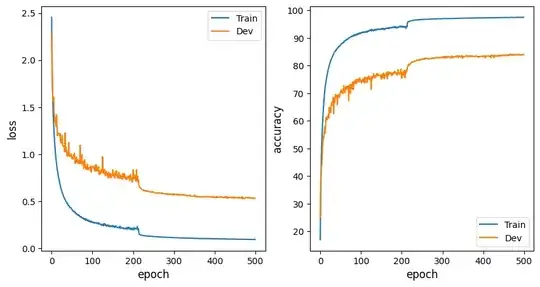

Before I fix this overfitting, I used the same model, but now trained with the balanced training dataset, I kept the test-set unbalanced only to represent the actual world. And the performance is:

How can the performance be so good? Why does the model not overfit now?