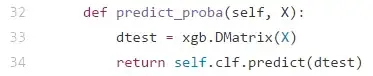

When using the Python / sklearn API of xgboost, are the probabilities obtained via the predict_proba method "real probabilities", or do I have to use binary:logitraw and manually apply the sigmoid function?

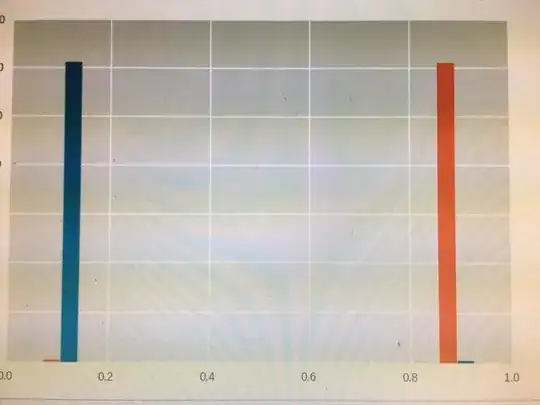

I want to experiment with different cutoff points. I am currently using binary:logistic via the sklearn XGBClassifier, but the probabilities returned by predict_proba look more like two discrete classes than a continuous score where changing the cutoff point affects the final scoring.

Is this the right way to obtain probabilities for experimenting with the cutoff value?
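As a sketch of what the cutoff experiment could look like, the snippet below sweeps a few cutoffs over positive-class probabilities and reports precision and recall. The probs and labels lists are made-up values for illustration, and confusion_at_cutoff is a hypothetical helper, not part of xgboost or sklearn; in practice probs would be the second column of predict_proba.

```python
def confusion_at_cutoff(probs, labels, cutoff):
    """Return (tp, fp, fn, tn) when predicting 1 for probs >= cutoff."""
    tp = fp = fn = tn = 0
    for p, y in zip(probs, labels):
        pred = 1 if p >= cutoff else 0
        if pred == 1 and y == 1:
            tp += 1
        elif pred == 1 and y == 0:
            fp += 1
        elif pred == 0 and y == 1:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


# Made-up positive-class probabilities and true labels (illustration only).
probs = [0.02, 0.10, 0.35, 0.48, 0.55, 0.70, 0.91, 0.97]
labels = [0, 0, 0, 1, 0, 1, 1, 1]

for cutoff in (0.3, 0.5, 0.7):
    tp, fp, fn, tn = confusion_at_cutoff(probs, labels, cutoff)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    print(f"cutoff={cutoff:.1f} precision={precision:.2f} recall={recall:.2f}")
```

When most scores sit close to 0 or 1, few samples fall between neighbouring cutoffs, so many cutoffs yield identical predictions and the metrics barely move.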