I get some metrics on validation data while training a model , and in my case the they are :

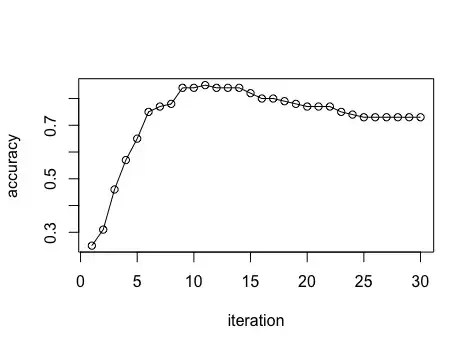

(0.25, 0.31, 0.46, 0.57, 0.65, 0.75, 0.77, 0.78, 0.84, 0.84, 0.85, 0.84, 0.84, 0.84, 0.82, 0.8, 0.8, 0.79, 0.78, 0.77, 0.77, 0.77, 0.75, 0.74, 0.73, 0.73, 0.73, 0.73, 0.73, 0.73)

They can described like this :

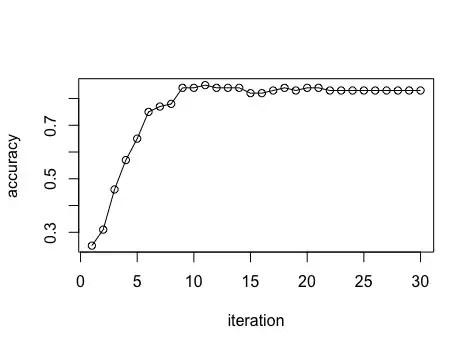

In my view , the ideal result should be like :

Is it a matter of overfitting ?

Unfortunately , I tried few times to change the regular coefficients to avoid overfitting , and adjust learning rate coefficients to slow down , but it was still "convex" .

How can I achieve the ideal result showed above ?

Much appreciated if anyone would give me some constructive tips ?