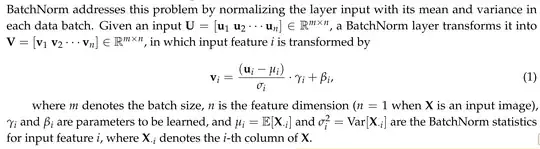

I understand that BatchNorm (Batch Normalization) normalizes the data input to the layer to (mean, std) = (0, 1), using statistics computed over the mini-batch, and then optionally scales (with $ \gamma $) and offsets (with $ \beta $) the result. BatchNorm follows this formula:

(retrieved from arxiv-id 1502.03167)

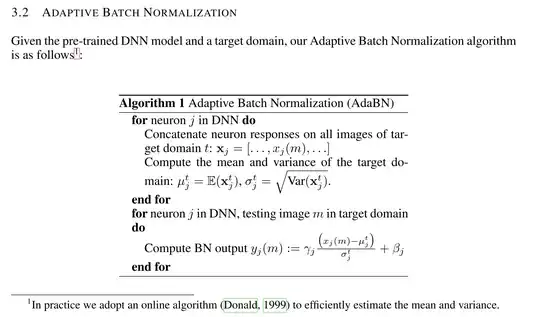
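For concreteness, the training-time transform can be sketched in plain Python for a mini-batch of scalars. This is only an illustration of the standard BatchNorm step: the function name `batch_norm` and the default `eps=1e-5` are assumptions made here, and the running statistics used at inference time are left out.

```python
import math

def batch_norm(xs, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize a mini-batch of scalars, then scale and shift.

    Hypothetical helper for illustration; eps=1e-5 is an assumed default.
    """
    m = len(xs)
    mean = sum(xs) / m                            # mini-batch mean
    var = sum((x - mean) ** 2 for x in xs) / m    # biased mini-batch variance
    # Normalize each value; eps keeps the division numerically stable.
    x_hat = [(x - mean) / math.sqrt(var + eps) for x in xs]
    # Learnable scale (gamma) and offset (beta).
    return [gamma * xh + beta for xh in x_hat]

ys = batch_norm([1.0, 2.0, 3.0, 4.0])
```

With the defaults `gamma=1` and `beta=0`, the output has mean approximately 0 and standard deviation approximately 1; other values of `gamma` and `beta` rescale and shift it.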

However, when it comes to 'adaptive BatchNorm', I don't understand what the difference is. What is adaptive BatchNorm doing differently? It is described as follows: