I am looking to employ Word2Vec to cluster documents and classify them by topic. However, it seems I need a significant data set [1,2] to achieve such a task.

I have a data set of thousands (not millions) of documents where my total word count is somewhere in the tens of thousands or maybe low hundreds of thousands.

Is there another ML methodology that allows me to attain my goal?

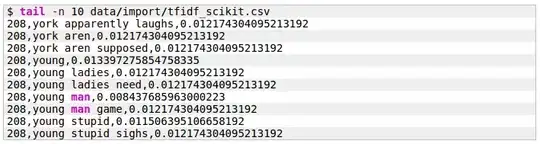

I see how to use TF/IDF to generate words and phrases from a corpus but output as expected is a list of common words and phrases along a flat dimension:

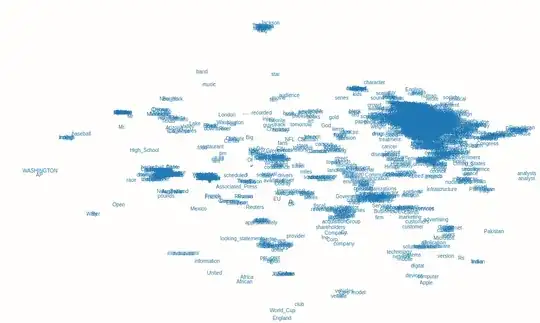

What I am looking for is something more along the lines of a high level cluster of vectors in space: [source]

[source]