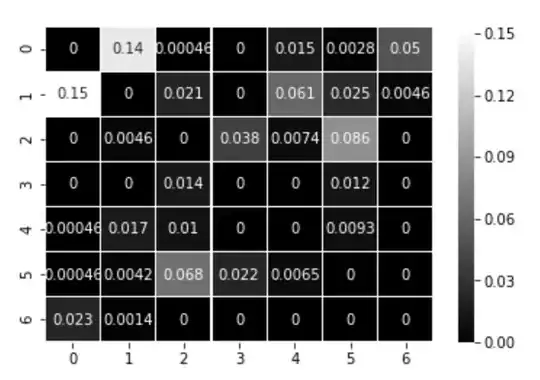

Here is the confusion matrix I got while experimenting with the Kaggle Forest Cover Type Prediction dataset.

In the matrix, lighter colors and higher numbers indicate higher error rates. As you can see, much of the misclassification happens between classes 1 and 0.
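To put numbers on the "class 1 vs. class 0" observation, one option is to row-normalize the confusion matrix and zero the diagonal so that only the error rates remain. The following is a minimal sketch that assumes scikit-learn (the post doesn't say which library produced the plot). The helper names `pairwise_error_rates` and `most_confused_pair` are hypothetical, and the toy labels stand in for the real predictions.

```python
import numpy as np
from sklearn.metrics import confusion_matrix


def pairwise_error_rates(y_true, y_pred, labels):
    """Row-normalized confusion matrix with the diagonal set to zero.

    Cell [i, j] is the fraction of samples whose true class is labels[i]
    that were predicted as labels[j], so only the mistakes remain.
    """
    cm = confusion_matrix(y_true, y_pred, labels=labels, normalize="true")
    np.fill_diagonal(cm, 0.0)
    return cm


def most_confused_pair(y_true, y_pred, labels):
    """Return (true_label, predicted_label, error_rate) for the worst cell."""
    errors = pairwise_error_rates(y_true, y_pred, labels)
    i, j = np.unravel_index(np.argmax(errors), errors.shape)
    return labels[i], labels[j], float(errors[i, j])


# Toy labels (hypothetical, not the Kaggle data) where class 0 is often predicted as 1.
y_true = [0, 0, 0, 0, 1, 1, 1, 1, 2, 2]
y_pred = [0, 0, 1, 1, 1, 1, 0, 1, 2, 2]
print(pairwise_error_rates(y_true, y_pred, labels=[0, 1, 2]))
print(most_confused_pair(y_true, y_pred, labels=[0, 1, 2]))  # -> (0, 1, 0.5)
```

Reading cells [0, 1] and [1, 0] separately shows whether the confusion is symmetric or mostly runs in one direction.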

Combining two classifiers, Random Forest and Extra Trees, has already improved the results somewhat. What methods could I use to reduce these two error rates further? Would stacking help in this case?
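Whether stacking helps can only be settled empirically on this data. The following is a minimal sketch that assumes scikit-learn ≥ 0.22 (for `StackingClassifier`). It also assumes the two forests were previously combined by averaging their class probabilities, which the post does not state. The sketch compares that soft-voting baseline against stacking with a logistic-regression meta-learner under cross-validation. The synthetic `make_classification` data, the hyperparameters, and the helper names `build_models` and `compare` are hypothetical placeholders, not the Kaggle setup.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import (
    ExtraTreesClassifier,
    RandomForestClassifier,
    StackingClassifier,
    VotingClassifier,
)
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score


def build_models(random_state=0):
    """Return a soft-voting baseline and a stacked ensemble of the two forests."""
    base = [
        ("rf", RandomForestClassifier(n_estimators=100, random_state=random_state)),
        ("et", ExtraTreesClassifier(n_estimators=100, random_state=random_state)),
    ]
    # Soft voting: a plain average of the two forests' class probabilities.
    voting = VotingClassifier(estimators=base, voting="soft")
    # Stacking: a logistic regression is fitted on the forests' out-of-fold
    # class probabilities (cv=5) and learns how to weight them.
    stacking = StackingClassifier(
        estimators=base,
        final_estimator=LogisticRegression(max_iter=1000),
        stack_method="predict_proba",
        cv=5,
    )
    return {"voting": voting, "stacking": stacking}


def compare(X, y, cv=3):
    """Mean cross-validated accuracy for each ensemble."""
    return {
        name: cross_val_score(model, X, y, cv=cv, scoring="accuracy").mean()
        for name, model in build_models().items()
    }


if __name__ == "__main__":
    # Synthetic stand-in for the real data (7 classes, like Cover_Type).
    X, y = make_classification(
        n_samples=1500, n_features=20, n_informative=10,
        n_classes=7, random_state=0,
    )
    for name, score in compare(X, y).items():
        print(f"{name}: mean CV accuracy = {score:.3f}")
```

Voting weights both forests equally, while stacking fits the weights on out-of-fold predictions. This lets it learn, for example, to trust one forest more for a particular class. Any real gain on the 0/1 confusion should appear both in the cross-validated score and in the confusion matrix recomputed from the stacked model's predictions.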

The data can be found at https://www.kaggle.com/c/forest-cover-type-prediction/data