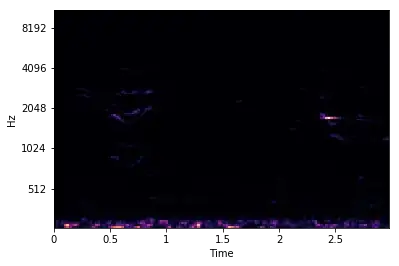

I was reading this paper on environmental noise discrimination using convolutional neural networks and wanted to reproduce their results. They convert WAV files into log-scaled mel spectrograms. How do you do this? I am able to convert a WAV file to a mel spectrogram:

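    import librosa
    import librosa.display

    # Load the first 3 seconds, compute the mel power spectrogram, and plot it
    y, sr = librosa.load('audio/100263-2-0-117.wav', duration=3)
    ps = librosa.feature.melspectrogram(y=y, sr=sr)
    librosa.display.specshow(ps, y_axis='mel', x_axis='time')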

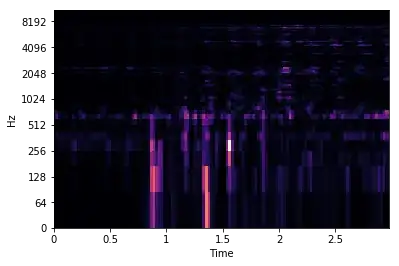

I am also able to display it as a log scaled spectrogram:

    librosa.display.specshow(ps, y_axis='log', x_axis='time')

Clearly, the two plots look different, but the underlying spectrogram `ps` is the same. Using librosa, how can I convert this mel spectrogram into a log-scaled mel spectrogram? Furthermore, what is the advantage of a log-scaled spectrogram over the original? Is it just to reduce the variance along the frequency axis to make it comparable to the time axis, or something else?
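For reference, "log-scaled" in this context usually means taking the logarithm of the spectrogram *values* (converting power to decibels), not changing the frequency axis. Passing `y_axis='log'` to `specshow` only changes how the axis is drawn. Whether the paper does exactly this is an assumption. The NumPy sketch below mirrors what `librosa.power_to_db` computes with its default `amin` and `top_db`; the helper name `power_to_db_sketch` and the array `ps_demo` are hypothetical stand-ins, not part of librosa.

```python
import numpy as np

def power_to_db_sketch(S, ref=1.0, amin=1e-10, top_db=80.0):
    """Convert a power spectrogram to decibels: 10 * log10(S / ref)."""
    S = np.asarray(S, dtype=float)
    # Floor tiny values at amin so log10 never sees zero
    log_spec = 10.0 * np.log10(np.maximum(amin, S))
    log_spec -= 10.0 * np.log10(np.maximum(amin, ref))
    if top_db is not None:
        # Clip everything more than top_db below the peak
        log_spec = np.maximum(log_spec, log_spec.max() - top_db)
    return log_spec

# Hypothetical stand-in for `ps`; with librosa the equivalent call would be
#   log_ps = librosa.power_to_db(ps, ref=np.max)
ps_demo = np.array([[1.0, 0.1], [0.01, 1e-12]])
log_ps_demo = power_to_db_sketch(ps_demo, ref=ps_demo.max())
```

With the real data, the result of `librosa.power_to_db(ps, ref=np.max)` can be plotted with `librosa.display.specshow(log_ps, y_axis='mel', x_axis='time')`. The decibel conversion compresses the dynamic range, so quiet components that are invisible on a linear power scale become visible.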