Note that the condition $k\leqslant \left ( \frac{R\left \| \bar{\theta} \right \|}{\gamma } \right )^{2}$ makes sense only if $\gamma$ is dependent on $\bar{\theta}$.

Otherwise (i.e. if $\gamma$ and $\bar{\theta}$ are independent), we could choose $\tilde\theta = \frac{\gamma}{R\lVert \bar\theta \rVert}\cdot \bar\theta$, and as Elias demonstrated, $\tilde\theta$ would remain a valid final weights vector, and we would get $$k \leqslant \left ( \frac{R\left \| \tilde{\theta} \right \|}{\gamma } \right )^{2}=\left ( \frac{R\left \| \frac{\gamma}{R\lVert \bar\theta \rVert}\cdot \bar\theta \right \|}{\gamma } \right )^{2}=1$$ which is obviously not always true.

So I guess that wherever you saw the condition $k\leqslant \left ( \frac{R\left \| \bar{\theta} \right \|}{\gamma } \right )^{2}$, the definition of $\gamma$ was something along these lines:

The margin of the hyperplane defined by $\bar \theta$ (a weights vector that also includes the bias) is the maximum $\gamma>0$ such that:

- for every positive sample $x$, it holds that $\bar \theta ^ T x \geqslant \gamma$.

- for every negative sample $x$, it holds that $\bar \theta ^ T x \leqslant -\gamma$.

Now, for any $c>0$, if we choose $\tilde\theta = c\cdot \bar\theta$, then the margin of the hyperplane defined by $\tilde\theta$ is $\tilde \gamma=c \cdot \gamma$, and thus:$$\frac{R\left \| \bar{\theta} \right \|}{\gamma } = \frac{R\left \| \tilde{\theta} \right \|}{\tilde\gamma } $$

Finally, I guess that people (e.g. wikipedia) usually choose $\bar{\theta}$ such that $\left \| \bar{\theta} \right \|=1$ because then we get the condition $k\leqslant \left ( \frac{R}{\gamma } \right )^{2}$, which looks nicer.

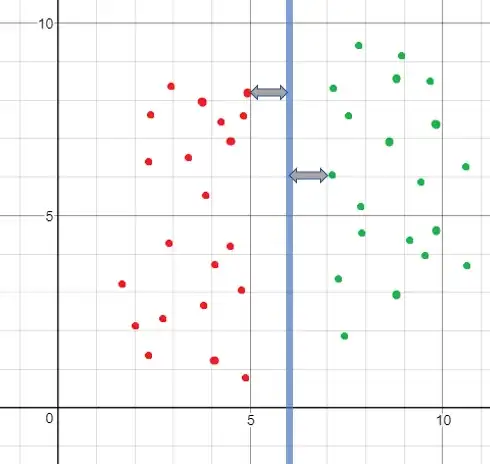

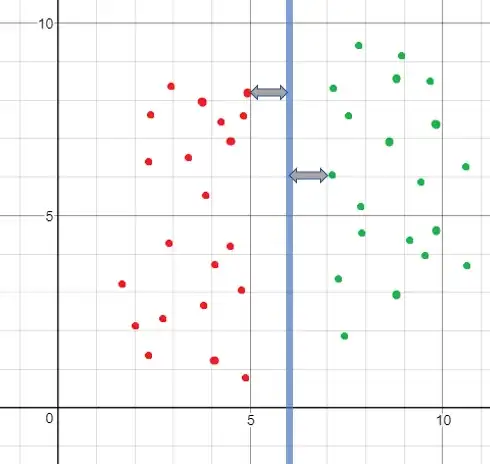

By the way, I was disappointed to find out that choosing $\bar{\theta}$ such that $\left \| \bar{\theta} \right \|=1$ doesn't give the $\gamma$ you would have expected from looking at the examples and the hyperplane in a graph. e.g.:

The hyperplane in the graph is the line $x=6$, and for $\bar{\theta}=

\left(\begin{gathered}1\\

0\\

-6

\end{gathered}

\right)$ we would get $\gamma=1$, which fits the geometric intuition. However, $\left \| \bar{\theta} \right \|\not=1$ in this case (because of the trick of putting the bias inside $\bar{\theta}$).

For $\bar{\theta}=

\left(\begin{gathered}\frac{1}{\sqrt{37}}\\

0\\

-\frac{6}{\sqrt{37}}

\end{gathered}

\right)$ it holds that $\left \| \bar{\theta} \right \|=1$, but $\gamma=\frac{1}{\sqrt{37}}$.