tl;dr: You should project the gradients before feeding them to Adam. You should also do the clipping afterwards in case of non-linear constraints and to avoid accumulation of numerical errors.

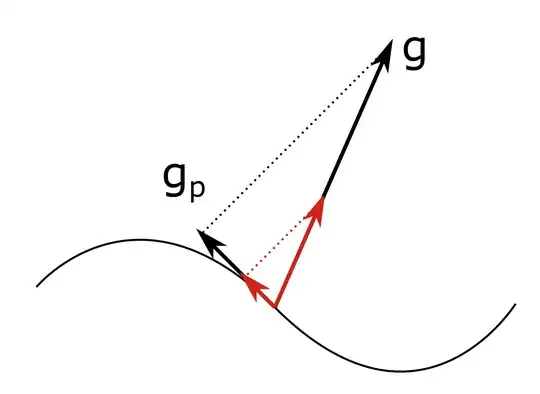

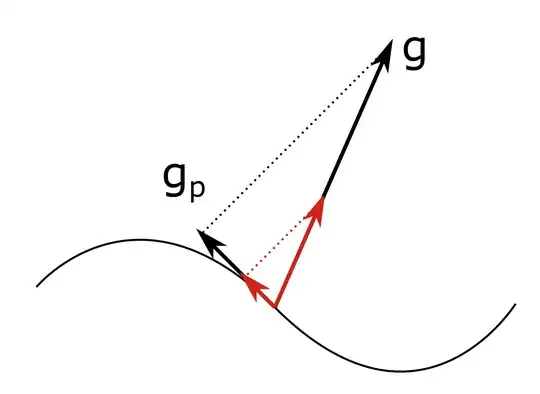

First, assuming normal SGD, for the specific case of (approximately) linear equality constraints, this can be done by

- projecting the gradient to the tangent space

- taking a step into that direction and

- projecting down again to the admissible set (only to correct for errors, numerical ones or due to non-linear constraints):

$$

g_p = \pi_\top(g; x_n) \\

x_{n+1} = \pi_C(x_n - \alpha g_p)

$$

where $g$ is the unprojected gradient, $\pi_\top(\,\cdot\,;x_n)$ projects to the tangent space at the current location $x_n$, $\pi_C(\,\cdot\,)$ projects to the admissible set, and $\alpha$ is the SGD learning rate.

In the general case, you will need to (also see the comments below and this thread)

- identify the search direction by taking an $\epsilon$ step in the true (negative) gradient direction

- project the result down to the admissible set $C$

- rescale appropriately to get an estimate of the projected gradient

- take the actual update step into that direction

- project down again to the admissible set.

The full update (for normal SGD) therefore becomes:

$$

\widehat{g}_p = {\textstyle\frac{1}{\epsilon}}(x_n - \pi_C(x_n - \epsilon g)) \qquad [\text{steps 1–3}]\\

x_{n+1} = \pi_C(x_n - \alpha \widehat{g}_p)~, \qquad [\text{steps 4 and 5}]

$$

where $g$ is the original gradient, $0<\epsilon\ll1$ is a small constant, $\widehat{g}_p$ is the estimate of the projected gradient, $\alpha$ is the SGD learning rate, and $\pi_C$ projects to the admissible set $C$.

Note that taking a small step $\epsilon$ computes a numerical approximation of the tangent space, which will work in a broader range of cases (e.g. for non-differentiable constraints). If you can, it will always be better (more exact and numerically stable) to analytically project the gradient. But this will obviously depend on your specific problem.

For Adam, you would use the projected gradient $g_p$ or its estimate $\widehat{g}_p$ and feed it to Adam. Adam then does its thing (estimating first/second moments, rescale/normalise gradients) and do a preliminary update step

$$

\widetilde{x}_{n+1} = x_n - \alpha \widetilde{g}_p~,

$$

where $\widetilde{g}_p$ is Adam's modified version of the projected gradient (based on the current and past gradients you fed it), and $\alpha$ is its learning rate. Last thing to do is the final projection:

$$

x_n = \pi_C(\widetilde{x}_{n+1})~.

$$

Background: The problem is not the exponential moving average but Adam's gradient normalisation based on the second moment. Essentially, the average gradient is rescaled by its average magnitude, which (for unconstraint problems) results in nice behaviour: If the magnitude is consistently small, gradients are scaled up to speed up convergence; if its huge, they are scaled down to avoid overshooting; if gradients are noisy around zero (small average gradient but large average magnitude), they are also scaled down, allowing for a precise convergence.

However, for projected gradients, this is a problem. The magnitude of the original gradient may be much larger than the magnitude of the projected gradient (especially close to an optimum on one of the constraints). If you do not project the gradients before feeding them the Adam, it will happily rescale everything with the magnitude of the original gradient, which basically means that things cannot ever converge. However, if you feed it the projected gradient, it will "see" its small magnitude, rescale it appropriately and things should be fine.

Edit: For linear equality constraints, it does not make a difference whether you first go into the unprojected gradient direction ($g$ below) and then project down, or you first project the gradient ($g_p$ below) and then take a step into that direction. For non-linear equality constraints, you would need to perform a second projection in the second case to correct for the errors due to the curvature.

For Adam, however, it makes a big difference because in the first case it sees a much larger gradient than in the second case. Adam tries to always make the effective gradient unit size, so it will scale down excessively in the first case.