The objective is to build a model that is capable of identifying information on receipts and invoices that can look completely different.

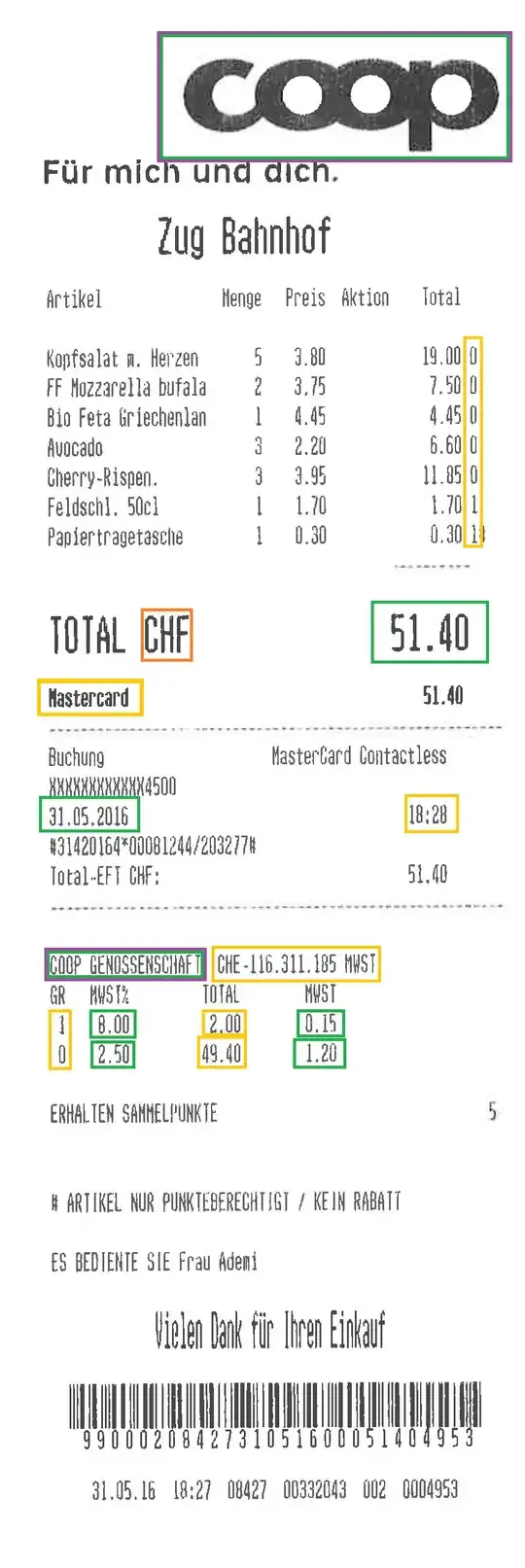

I've had a discussion with my brother about the right approach. I have attached an example, here the original and below is the important information in boxes:

The green boxes are the must-have information. The one in purple and green indicates that we need either or. The orange information would be a nice-to-have, but not necessarily required. Some of the boxes have context and inter linkage.

From a data set point of view, we have a sample size of 1,000 receipts and all of them have the necessary information extracted. We could increase the sample size further if that was required.

The approach that I would have chosen:

Treat everyone of the images of the receipts like a game and let the model figure out itself how to arrive at the right conclusion. This will most likely be very computing intensive but I feel like it will be more robust when dealing with new image types.

The approach my brother has suggested:

Basically using the boxes that I've provided and let me model learn from that. The model would then learn to identify the important areas on a receipt or invoice and would go from there. He compared the model to one that would identify license plates.

Edit:

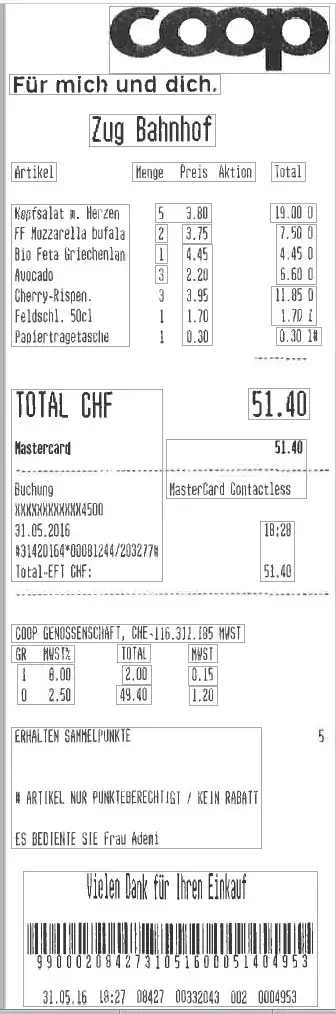

Just to reiterate why I think OCR is only part of the solution but not the solution itself. Here is the Adobe Acrobat OCR result:

Perfect if you ask me. It just doesn't help me figure out what values to use and which to ignore. I don't want to do this manually. I want the model(s) to return for me:

- Total amount

- Sales Tax & Amount

- Creditor (i.e. the company and ideally the tax identifier CHE-xxxxx MWST

- Date and ideally time

- Payment method

Does this make more sense now? I just don't see how OCR gets me there. It will only be the method to extract the values.