I want to train YOLO3 on a custom dataset whose raw labels are in JSON format. Each bounding box in the JSON is specified as [x1, y1, x2, y2].

So far, I have converted [x1, y1, x2, y2] to [cx, cy, pw, ph], where cx and cy are the x and y coordinates of the bounding box center, scaled by the image width and height, and pw and ph are the bounding box's width and height as ratios of the image's width and height. But I don't think that's complete (or even right).
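The normalization described above can be sketched in Python as follows. The function name `xyxy_to_cxcywh` is illustrative, and the box is assumed to be in pixel coordinates with the origin at the top-left corner of the image.

```python
def xyxy_to_cxcywh(box, img_w, img_h):
    """Convert a pixel box [x1, y1, x2, y2] to [cx, cy, w, h] normalized to [0, 1]."""
    x1, y1, x2, y2 = box
    cx = (x1 + x2) / 2 / img_w  # box center x as a fraction of image width
    cy = (y1 + y2) / 2 / img_h  # box center y as a fraction of image height
    w = (x2 - x1) / img_w       # box width relative to image width
    h = (y2 - y1) / img_h       # box height relative to image height
    return [cx, cy, w, h]

print(xyxy_to_cxcywh([100, 100, 200, 200], 416, 416))  # ≈ [0.3606, 0.3606, 0.2404, 0.2404]
```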

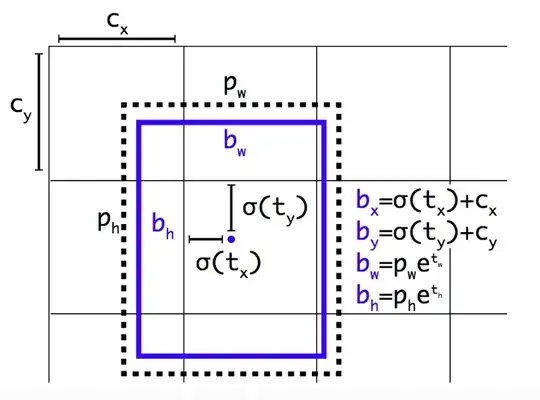

As far as I understand, YOLO3 assigns N anchor boxes to each grid cell (the image is divided into S x S grid cells), so each bounding box prediction is made relative to one anchor box of one grid cell (the anchor box with the highest IOU with the ground truth). The formulas are below:
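In the notation of the YOLOv3 paper, the network outputs raw values $t_x, t_y, t_w, t_h$ for each anchor, and the predicted box is decoded as below, where $(c_x, c_y)$ is the offset of the grid cell from the top-left corner of the image (in grid units), $(p_w, p_h)$ is the width and height of the anchor (prior), and $\sigma$ is the logistic sigmoid. Note that $p_w, p_h$ here are the anchor dimensions, not the box ratios I called pw, ph above.

$$
\begin{aligned}
b_x &= \sigma(t_x) + c_x \\
b_y &= \sigma(t_y) + c_y \\
b_w &= p_w \, e^{t_w} \\
b_h &= p_h \, e^{t_h}
\end{aligned}
$$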

How, then, should I prepare the ground truths so that YOLO3 can use them? Do I have to somehow invert those formulas? And how do I account for different numbers of scales and different numbers of anchor boxes?

As a concrete example, suppose I have a 416 x 416 image and a 13 x 13 grid. The ground-truth bounding box (from the dataset) is [x1=100, y1=100, x2=200, y2=200], with class c. What would the converted values for YOLO3 be?
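The grid part of this example is plain arithmetic. A sketch, assuming a square image and a square grid (variable names are illustrative):

```python
import math

IMG_SIZE = 416            # input resolution (square image assumed)
GRID = 13                 # S x S grid
stride = IMG_SIZE / GRID  # pixels per grid cell: 32.0

x1, y1, x2, y2 = 100, 100, 200, 200
cx, cy = (x1 + x2) / 2, (y1 + y2) / 2  # box center in pixels: (150.0, 150.0)
w, h = x2 - x1, y2 - y1                # box size in pixels: (100, 100)

# Center in grid units; the integer part selects the responsible cell.
gx, gy = cx / stride, cy / stride          # (4.6875, 4.6875)
col, row = math.floor(gx), math.floor(gy)  # cell (4, 4)
off_x, off_y = gx - col, gy - row          # offset inside the cell: (0.6875, 0.6875)

# Box size in grid units.
gw, gh = w / stride, h / stride            # (3.125, 3.125)

print(col, row, off_x, off_y, gw, gh)
```

So the box center lies in cell (4, 4) at an in-cell offset of 0.6875 along each axis, and the box spans 3.125 cells in each direction.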

Edit: Say we have 2 classes [car, person] and 2 anchors (one wide, one tall).
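One common way to find the anchor with the highest IOU is to compare shapes only, treating the ground-truth box and each anchor as if they shared the same center. A sketch in Python; the anchor sizes in `ANCHORS` are hypothetical, chosen only so that one anchor is wide and one is tall:

```python
def wh_iou(wh1, wh2):
    """IOU of two boxes that share the same center, given only (width, height)."""
    inter = min(wh1[0], wh2[0]) * min(wh1[1], wh2[1])
    union = wh1[0] * wh1[1] + wh2[0] * wh2[1] - inter
    return inter / union

# Hypothetical anchor sizes in pixels: index 0 is wide, index 1 is tall.
ANCHORS = [(150, 60), (80, 110)]

def best_anchor(box_wh, anchors=ANCHORS):
    """Index of the anchor whose shape overlaps the ground-truth box the most."""
    ious = [wh_iou(box_wh, a) for a in anchors]
    return ious.index(max(ious))

print(best_anchor((100, 100)))  # 1, i.e. the tall anchor ("anchor 2")
```

With these made-up anchors, the 100 x 100 example box overlaps the tall anchor more (IOU ≈ 0.74 versus ≈ 0.46), so it would be assigned to anchor 2.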

Would the output be a tensor of shape 13 x 13 x (2*(5+2)) in which the vector of length 2*(5+2) is all zeros for every grid cell except one (the cell in which the center of the ground-truth bounding box falls)?

In that case, for that cell (say c[i,j]), suppose anchor 2 gives the largest IOU and the ground-truth class is person. Then c[i,j,:7] (the anchor 1 prediction) would be ignored and c[i,j,7:] (the anchor 2 prediction) would be [bx, by, bw, bh, conf, 0, 1].
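The target layout described in the last two paragraphs can be sketched with NumPy as follows. It only mirrors the structure described here (one filled slot of 7 values, everything else zero); the helper `make_target` is hypothetical, and the four box values written into the slot are placeholders, since how to encode them is exactly the open question.

```python
import numpy as np

S = 13                  # grid size
NUM_ANCHORS = 2         # one wide, one tall
NUM_CLASSES = 2         # [car, person]
SLOT = 5 + NUM_CLASSES  # bx, by, bw, bh, conf, then one-hot classes

def make_target(row, col, anchor, box, class_id):
    """All-zero target except for one anchor slot of one cell (hypothetical helper)."""
    target = np.zeros((S, S, NUM_ANCHORS * SLOT), dtype=np.float32)
    start = anchor * SLOT                          # anchor 0 -> [0:7], anchor 1 -> [7:14]
    target[row, col, start:start + 4] = box        # bx, by, bw, bh (placeholder encoding)
    target[row, col, start + 4] = 1.0             # confidence / objectness
    target[row, col, start + 5 + class_id] = 1.0  # one-hot class
    return target

# Cell (4, 4), anchor 2 (index 1), class "person" (index 1).
t = make_target(4, 4, 1, [0.6875, 0.6875, 3.125, 3.125], 1)
print(t.shape)              # (13, 13, 14)
print(t[4, 4, 7:])
print(np.count_nonzero(t))  # 6
```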

So how should the ground truth for the person's bounding box be encoded? Should it be an offset from a particular anchor of a grid cell? This is what is still unclear to me.
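If the targets are meant to match the YOLOv3 parameterization (`b_x = σ(t_x) + c_x`, `b_w = p_w · exp(t_w)`, and likewise for y and h, with `(p_w, p_h)` the anchor size), the YOLOv3 paper says the ground-truth values for the coordinate predictions are obtained by inverting these equations: `σ(t_x) = b_x - c_x` and `t_w = ln(b_w / p_w)`. A minimal sketch of that encoding, assuming the box is given in grid units (the example box [100, 100, 200, 200] on a 416 x 416 image with a 13 x 13 grid has center (4.6875, 4.6875) and size 3.125 x 3.125) and a hypothetical anchor of 80 x 110 px, i.e. 2.5 x 3.4375 grid units at a 32-pixel stride. The function name `encode_box` is illustrative. The x/y targets are kept as in-cell offsets (targets for `σ(t_x)`, `σ(t_y)`) rather than passed through the inverse sigmoid, which diverges at offsets of exactly 0 or 1.

```python
import math

def encode_box(gx, gy, gw, gh, anchor_w, anchor_h):
    """Encode a ground-truth box, given in grid units, relative to its cell and anchor.

    Returns ((row, col), (sx, sy, tw, th)), where sx, sy are the targets for
    sigmoid(t_x), sigmoid(t_y) and tw, th are the targets for the raw t_w, t_h.
    """
    col, row = math.floor(gx), math.floor(gy)  # responsible cell: c_x = col, c_y = row
    sx, sy = gx - col, gy - row                # sigmoid(t_x) = b_x - c_x, same for y
    tw = math.log(gw / anchor_w)               # t_w = ln(b_w / p_w)
    th = math.log(gh / anchor_h)               # t_h = ln(b_h / p_h)
    return (row, col), (sx, sy, tw, th)

# Example box in grid units, with a hypothetical 80 x 110 px anchor at stride 32.
cell, enc = encode_box(4.6875, 4.6875, 3.125, 3.125, 80 / 32, 110 / 32)
print(cell, enc)
```

Decoding reverses this: the cell index plus the offset gives the center, and the anchor size times `exp(tw)`, `exp(th)` gives the width and height.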

Thank you!