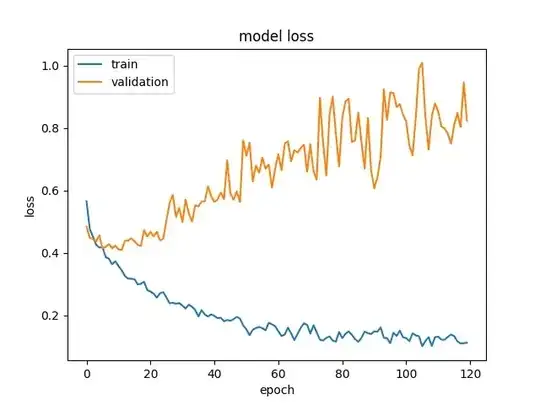

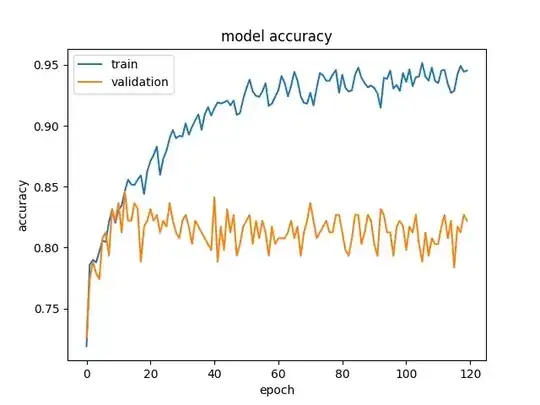

Your model is indeed overfitting. There can be a lot of reasons why it could be happening - How many images are you training with?, Aren't you using Regularization, data augmentation using random crops or flips, Dropout to prevent overfitting?

So my question is at what loss (val_loss) can we consider the model

trained?

We can generally stop training when Validation loss doesn't decrease anymore. This is generally done by defining a variable eg: n = 5 and checking if the validation loss has decreased after running n epochs. If you're solving a well-known problem(MNIST, Fashion-MNIST, CIFAR-10, etc), you can check the best score/loss achievable by the model and tweak your model(add or remove layers, prevent overfitting, etc) to achieve the score.

And is there a problem if the images that I feed the VGG-16 are

RGB/255?

You should preprocess the images for faster training and also subtract the mean RGB pixel values of images used to train pretrained network(if the pretrained net is trained using imagenet data,then subtract imagenet dataset's mean RGB pixel values from your input images) from your input images. Here you can read keras preprocessing_input

method.

And if someone could explain how high validation loss (even if validation accuracy is high) could impact the model?

Validation loss measures how generalizable your model is to unseen data. If your training loss and validation loss don't improve during training, then you are underfitting. If you're training loss is low but your validation loss is stagnant or increasing then you're overfitting, which means that your model is learning unwanted noise or patterns which help it learn or memorize training data but are not generalizable on an unseen validation set.

Here is a link explaining data preprocessing, augmentation, transfer learning using pretrained net.