I am trying to understand the purpose of Dueling DQN.

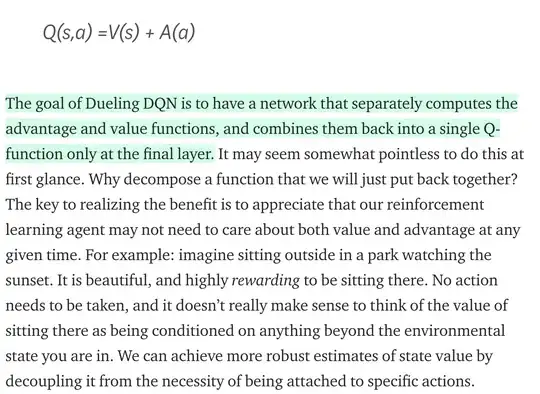

According to this blogpost:

our reinforcement learning agent may not need to care about both value and advantage at any given time - this seems to be what I can't understand.

Let's assume we are in state $S_t$ and we select an action which has the highest score. This is the promised total reward that we will get in the future if we take the action.

Notice, we don't yet know the V or A of the future state $S_{t+1}$ (where we will end up after taking this best action), so decoupling V and A in any state, including S_{t+1} seems unnecessary. Additionally, once we do get to work with them we still seem to recombine them during $S_{t+1}$ into a single Q-value, just as was noted in the blog post.

So, to complete my thought: V and A seem to be a "hidden intermediate step", that still gets combined into Q, so we never know it's even there. Even if network somehow benefits from one or the other, how does it help if both streams still end up as Q?

A slightly unrenated thought, 'V' is the score of the current state only. 'A' is the total future expected Advantage, for a particular action, right?

Can someone provide a different example to the sunset?

Edit after answer was accepted:

Found a friendly explanation about this architecture here.

Also, if someone struggles to understand what V, Q and A is, read this answer, and my comment under it.