I need to quantify the importance of the features in my model. However, when I use XGBoost to do this, I get completely different results depending on whether I use the importance plot (`xgb.plot_importance`) or the `feature_importances_` attribute.

For example, `model.feature_importances_` and `xgb.plot_importance(model)` give me values that do not line up. Presumably the plot is built from the same feature importances, but the indexes of the numpy array `feature_importances_` do not correspond to the feature labels shown by `plot_importance`.
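
To make the comparison concrete, here is a minimal pure-Python sketch of how the two views could be lined up. It assumes (I have not verified this for my model) that the plot labels follow XGBoost's default `f<index>` naming, used when a model is trained without feature names, and that the plot ranks features by a `{"f<index>": score}` dict like the one `Booster.get_score()` returns. The helper names and the `toy_scores` values are hypothetical, made up for illustration only.

```python
# Sketch: align a {"f<index>": score} dict (assumed to be the shape of
# Booster.get_score() output for a model without feature names) with
# positional indexes like those of feature_importances_.
# toy_scores below is hypothetical data, not output from my model.

def scores_to_array(scores, n_features):
    """Return normalized scores as a list indexed by column position.

    Assumes keys use the default "f<index>" naming. Features that are
    missing from the dict (never used in a split) get 0.0.
    """
    values = [0.0] * n_features
    for name, score in scores.items():
        values[int(name[1:])] = float(score)  # "f81" -> position 81
    total = sum(values)
    return [v / total for v in values] if total else values


def ranked(scores):
    """Sort (name, score) pairs from most to least important."""
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


toy_scores = {"f2": 6, "f0": 2, "f3": 8}  # hypothetical split counts
print(ranked(toy_scores))               # [('f3', 8), ('f2', 6), ('f0', 2)]
print(scores_to_array(toy_scores, 5))   # [0.125, 0.0, 0.375, 0.5, 0.0]
```

If the plot and the array really come from the same scores, the top label in the plot should then match `argmax()` of the aligned array.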

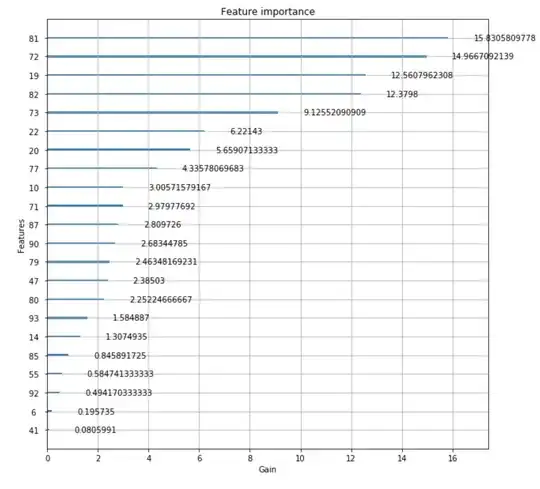

Here is what the plot looks like:

But `model.feature_importances_` gives entirely different values:

    array([0.        , 0.        , 0.        , 0.        , 0.        ,
           0.        , 0.00568182, 0.        , 0.        , 0.        ,
           0.13636364, 0.        , 0.        , 0.        , 0.01136364,
           0.        , 0.        , 0.        , 0.        , 0.07386363,
           0.03409091, 0.        , 0.00568182, 0.        , 0.        ,
           0.        , 0.        , 0.        , 0.        , 0.        ,
           0.        , 0.        , 0.        , 0.        , 0.        ,
           0.        , 0.        , 0.        , 0.        , 0.        ,
           0.        , 0.00568182, 0.        , 0.        , 0.        ,
           0.        , 0.        , 0.00568182, 0.        , 0.        ,
           0.        , 0.        , 0.        , 0.        , 0.        ,
           0.01704546, 0.        , 0.        , 0.        , 0.        ,
           0.        , 0.        , 0.        , 0.        , 0.        ,
           0.        , 0.        , 0.        , 0.        , 0.        ,
           0.        , 0.05681818, 0.15909091, 0.0625    , 0.        ,
           0.        , 0.        , 0.10227273, 0.        , 0.07386363,
           0.01704546, 0.05113636, 0.00568182, 0.        , 0.        ,
           0.02272727, 0.        , 0.01136364, 0.        , 0.        ,
           0.11363637, 0.        , 0.01704546, 0.01136364, 0.        ,
           0.        , 0.        , 0.        , 0.        , 0.        ,
           0.        , 0.        , 0.        ], dtype=float32)

If I look up feature 81 directly (`model.feature_importances_[81]`), I get 0.051136363. However, `model.feature_importances_.argmax()` returns 72.
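
To double-check these two lookups, here is a small numpy sketch that rebuilds the posted array from its nonzero entries (copied from the output above) and repeats them. It also shows that every entry is, to float32 precision, an integer multiple of 1/176 and that those integers sum to 176. My guess is that this means the array holds normalized split counts (importance type "weight"), but that interpretation is an assumption I have not confirmed.

```python
import numpy as np

# Nonzero entries copied from the feature_importances_ output above
# (index -> value); every other position of the 103-element array is 0.
nonzero = {
    6: 0.00568182, 10: 0.13636364, 14: 0.01136364, 19: 0.07386363,
    20: 0.03409091, 22: 0.00568182, 41: 0.00568182, 47: 0.00568182,
    55: 0.01704546, 71: 0.05681818, 72: 0.15909091, 73: 0.0625,
    77: 0.10227273, 79: 0.07386363, 80: 0.01704546, 81: 0.05113636,
    82: 0.00568182, 85: 0.02272727, 87: 0.01136364, 90: 0.11363637,
    92: 0.01704546, 93: 0.01136364,
}

importances = np.zeros(103, dtype=np.float32)
for idx, value in nonzero.items():
    importances[idx] = value

print(importances.argmax())  # 72
print(importances[81])       # about 0.05113636

# Multiplying by 176 gives (almost exactly) integers that sum to 176,
# which would fit normalized split counts; this reading is an assumption.
counts = np.rint(importances * 176).astype(int)
print(counts.sum())                                      # 176
print(np.allclose(importances * 176, counts, atol=1e-4))  # True
```

So the array itself is internally consistent; the mismatch is only between it and the plot.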

So the two sets of values do not correspond to each other, and I am unsure what to make of this.

Does anyone know why these values are not concordant?