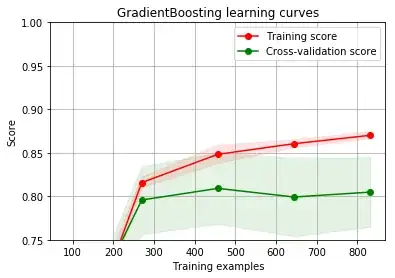

As you can see, the GradientBoostingClassifier overfits as more training examples are added. These are my model's parameters:

```python
{'learning_rate': 0.1, 'loss': 'deviance', 'max_depth': 6, 'max_features': 0.3, 'min_samples_leaf': 80, 'n_estimators': 300}
```

What should I do to make my model better, or should I stop training at 350?