I am currently working on a problem of multi-class classification on testing logs data.

Basically, I have the context data from tests' execution saved, and want to automate the analysis of the failed tests. The two targets I have are the page (which corresponds to the working team) responsible for the error, and the type of error.

So, for this matter I got the data at a time t and worked on it locally in order to get the best results possible.

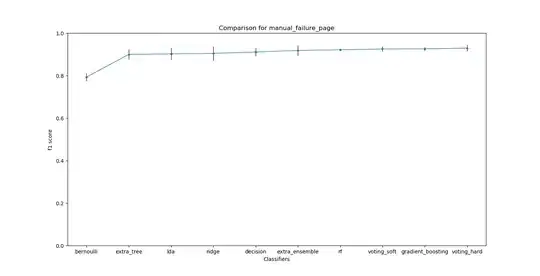

I trained a few models, optimized them through hyper-parameter optimization with grid search, and got good results.

The f1_scores are around 90+% for the target page and 80+% for the target type.

These results seem good so I was working on ensembling methods to then get even better results from the combinations of my different models.

On the following figures, you can see the f1 scores and variances of different models for the target page.

But as I was working on ways to do ensembling, I got the idea of getting the latest testing data (remember I was working with the same data from the time t).

I went from having only 2162 samples to 4367, so a bit more than double the amount of data.

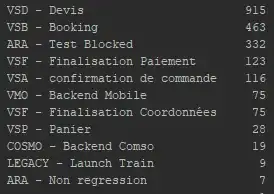

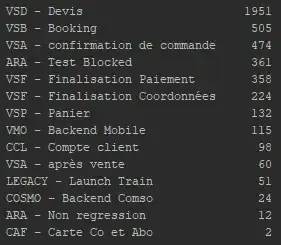

This gives my data a distribution different from before (on the following figures we can see the number of values per class for the target page).

Above, we can see that there are 11 highly unbalanced classes.

And on the second figure, we can see that we have now 14 classes, also very unbalanced.

(The differences with the new data are also true for the target type). It resulted in my models loosing between 6 and 10% of f1_score.

So, my questions are the following :

- How do I work around data in this case in order for the models to work well with new data even when the distribution might change ? Will I need to check the evolution of the scores and when it goes too low, redo the parameter optimization ?

- I am for now working with each target independently. Should I try multi-labeling between both targets ? If so, do you have any recommendation on algorithms or ways to go around it ?

- Any suggestion on how to improve the work, maybe different models to try, or ensembling methods would be greatly appreciated ☺

Thanks for reading this long post !