This question also asked on another StackExchange with Bounty. Question here.

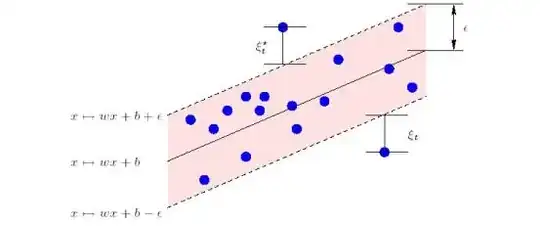

I'm working with SVR, and using this resource. Erverything is super clear, with epsilon intensive loss function (from figure). Prediction comes with tube, to cover most training sample, and generalize bounds, using support vectors.

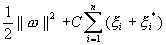

Then we have this explanation. This can be described by introducing (non-negative) slack variables , to measure the deviation of training samples outside -insensitive zone. I understand this error, outside tube, but don't know, how we can use this in optimization. Could somebody explain this?

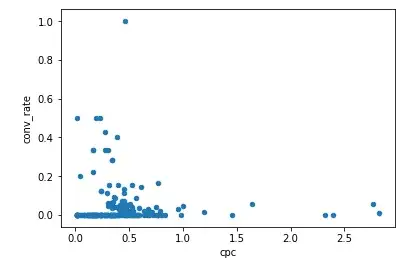

In local source. I'm trying to achieve very simple optimization solution, without libraries. This what I have for loss function.

import numpy as np

# Kernel func, linear by default

def hypothesis(x, weight, k=None):

k = k if k else lambda z : z

k_x = np.vectorize(k)(x)

return np.dot(k_x, np.transpose(weight))

.......

import math

def boundary_loss(x, y, weight, epsilon):

prediction = hypothesis(x, weight)

scatter = np.absolute(

np.transpose(y) - prediction)

bound = lambda z: z \

if z >= epsilon else 0

return np.sum(np.vectorize(bound)(scatter))