The answer will depend on some things such as your hardware and the image you process. Additional, we should distinguish if you are talking about a single run through the network in training mode or in inference mode. In the former, additional parameters are pre-computed and cached as well as several layers, such as dropout, being used, which are simply left out during inference. I will assume you want to simply produce a single prediction for a single image, so we are talking about inference time.

Factors

The basic correlation will be:

- more parameters (i.e. learnable weights, bigger network) - slower than a model with less parameters

- more recurrent units - slower than a convolutional network, which is slower than a full-connected network1

- complicated activation functions - slower than simple ones, such as ReLU

- deeper networks - slower than shallow networks (with same number of parameters) as less run in parallel on a GPU

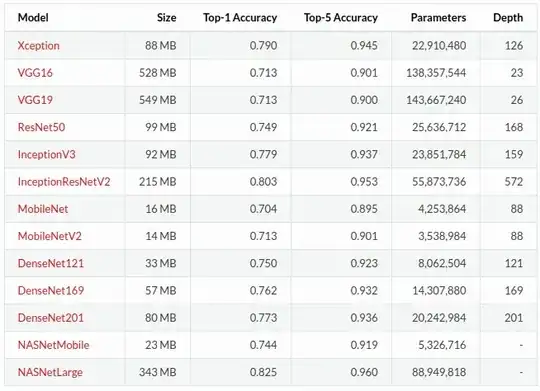

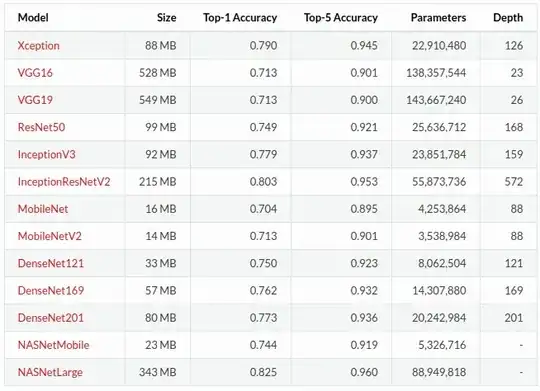

Having listed a few factors in the final inference time required (time taken to produce one forward run through the network), I would guess that MobileNetV2 is probably among the fastest pre-trained model (available in Keras). We can see from the following table that this network has a small memory footprint of only 14 megabytes with ~3.5 million parameters. Compare that to your VGG test, with its ~138 million... 40 times more! In addition, the main workhorse layer of MobileNetV2 is a conv layer - they are essentially clever and smaller versions of residual networks.

Extra considerations

The reason I included the whole table above was to highlight that with small memory footprints and fast inference times, comes a cost: low accuracies!

If you compute the ratios of top-5 accuracy versus number of parameters (and generally versus memory), you might find a nice balance between inference time and performance.

1 Have a look at this comparison of CNNs with Recurrent modules