While explaining the concept of linear regression to one of my peers, I got stuck answering this question: why don't we use Manhattan distance instead of Euclidean distance in linear regression? Can anyone give the intuition behind this?


Least squares is easier to minimize than least absolute deviation (LAD) because the latter is non-differentiable. Nowadays, LAD, a.k.a. median regression, is also used quite frequently. – Michael M Dec 25 '18 at 15:04

3 Answers

The Euclidean distance is the most commonly used measure of distance in the context of least squares regression, because it is the distance measure that is induced by the Euclidean norm. In other words, the Euclidean distance is the natural distance measure to use in least squares regression, because it is the distance measure that is consistent with the assumptions of the least squares criterion.

The use of the Euclidean distance in linear regression is usually motivated by the assumption that the errors between the observed values and the predicted values are normally distributed. Under this assumption, minimizing the sum of squared errors (SSE) is equivalent to maximum likelihood estimation, so the SSE is the appropriate measure of goodness of fit and the model parameters are chosen to minimize it.
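The link between normal errors and the SSE can be written out in a short derivation. This is a sketch under stated assumptions: the notation $y_i = x_i^\top \beta + \varepsilon_i$, with independent errors $\varepsilon_i \sim \mathcal{N}(0, \sigma^2)$, is introduced here for illustration and does not appear in the original answer.

$$
\log L(\beta) = -\frac{n}{2}\log(2\pi\sigma^2) - \frac{1}{2\sigma^2}\sum_{i=1}^{n}\left(y_i - x_i^\top \beta\right)^2
$$

Only the last term depends on $\beta$, so maximizing the log-likelihood is the same as minimizing $\sum_{i=1}^{n} (y_i - x_i^\top \beta)^2$. If the errors were instead assumed to follow a Laplace distribution, the same argument would lead to minimizing $\sum_{i=1}^{n} |y_i - x_i^\top \beta|$, which is the Manhattan-style criterion.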

The Manhattan distance, on the other hand, is the distance measure induced by the $L_1$ norm. It is defined as the sum of the absolute differences between the coordinates of two points. Using it as a regression loss gives least absolute deviations (LAD) rather than least squares.

One reason why the Manhattan distance is less commonly used in linear regression is that it is less convenient mathematically. The squared Euclidean distance is differentiable everywhere, while the Manhattan distance is continuous but not differentiable wherever a coordinate difference is zero, because the absolute value has a kink there. As a result, the least squares solution can be written in closed form, whereas the absolute-error objective has to be minimized by other means, such as linear programming or iterative optimization.

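In addition, the Manhattan distance does not have the same statistical properties as the Euclidean distance: minimizing squared errors estimates the conditional mean of the target, while minimizing absolute errors estimates the conditional median, which is less sensitive to outliers.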
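The difference in statistical behaviour can be seen in a minimal sketch that fits the simplest possible model, a single constant, under both losses. The data values and the candidate grid are made up for illustration; the brute-force grid search is only there to keep the example dependency-free and is not how either loss is minimized in practice.

```python
import numpy as np

# Illustrative data: four ordinary values and one outlier (assumed, not from the thread).
y = np.array([1.0, 2.0, 3.0, 4.0, 100.0])

# Candidate constants c on a fine grid; the model predicts c for every observation.
candidates = np.linspace(0.0, 100.0, 10001)

# Residual matrix: one row per observation, one column per candidate.
residuals = y[:, None] - candidates[None, :]

sum_squared = (residuals ** 2).sum(axis=0)    # squared (Euclidean-style) loss
sum_absolute = np.abs(residuals).sum(axis=0)  # absolute (Manhattan-style) loss

best_squared = candidates[sum_squared.argmin()]
best_absolute = candidates[sum_absolute.argmin()]

print("squared loss minimizer: ", best_squared, "| mean:  ", y.mean())
print("absolute loss minimizer:", best_absolute, "| median:", np.median(y))
```

The squared loss is minimized near the mean, which the outlier drags upward, while the absolute loss is minimized near the median, which barely moves.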


Linear regression does not typically use Euclidean distance. The most common loss for linear regression is the least-squares error. It might be useful to examine this idea visually.

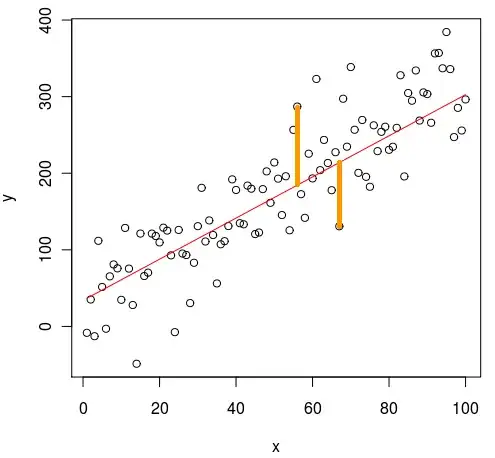

Here is least squared error:

The orange lines show examples of residuals, the delta between predicted and observed for the target variable. Each residual is squared, all of the squares are summed, and then divided by the count.

Here is Euclidean distance:

Euclidean distance is different: the orange lines show it as the perpendicular distance from each point to the nearest point on the line.
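The two quantities described above can be computed side by side. This is a small sketch with a hypothetical line and made-up points; `slope`, `intercept`, `x`, and `y` are illustrative names, not values taken from the figure.

```python
import numpy as np

# Hypothetical fitted line y = slope * x + intercept and a few illustrative points.
slope, intercept = 2.0, 1.0
x = np.array([0.0, 1.0, 2.0])
y = np.array([2.0, 2.0, 7.0])

# Vertical residuals: observed minus predicted, measured along the target axis.
vertical = y - (slope * x + intercept)

# Least-squares error as described above: square, sum, divide by the count.
mean_squared_error = np.mean(vertical ** 2)

# Perpendicular (Euclidean) distance from each point to the line.
perpendicular = np.abs(vertical) / np.sqrt(1.0 + slope ** 2)

print("vertical residuals:     ", vertical)
print("mean squared error:     ", mean_squared_error)
print("perpendicular distances:", perpendicular)
```

For any line that is not horizontal, the perpendicular distance is strictly smaller than the absolute vertical residual, so the two criteria generally lead to different fitted lines.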


The main reason may be that the Euclidean metric is the one typically used; Manhattan distance may be appropriate if the different dimensions are not comparable. With regression-based methods, you usually have real-valued features, which you normalise before feeding them to your model. Normalising the features in effect makes them comparable, so the case for Manhattan distance is weaker. If you have categorical features, you may want to use decision trees instead. I have never seen people show much interest in Manhattan distance, but based on answers [2, 3] there are some use cases for it too.
