I need some suggestions to improve my model accuracy.

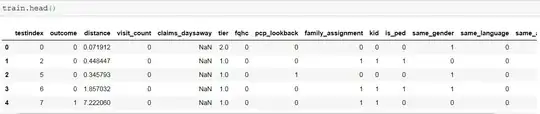

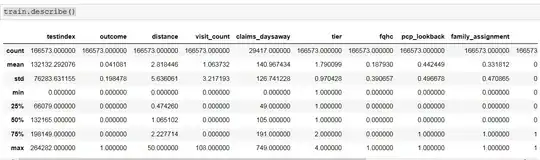

The training data shape is : (166573, 14)

It has all int and float columns. I have dropped claims_daysaway column as most of values are NaN and replaced Nan value with mean for tier column.

X_train = train.drop(['outcome','testindex','claims_daysaway'], axis=1)

y_train = train['outcome']

As the values were on different scale, I have used StandScaler() to standardize values.

This dataset is highly imbalanced.

train['outcome'].value_counts()

0 159730

1 6843

I tried SMOTE for oversampling.

from imblearn.over_sampling import SMOTE

smt = SMOTE()

X_train, y_train = smt.fit_sample(X_train, y_train)

pd.value_counts(pd.Series(y_train))

1 159730

0 159730

Lastly, I fit model using XGBClassifier but when tried this model on testdata and submitted it, it gives only 60% roc_auc_score.

Please suggest how to handle imbalanced dataset better.