I have a heavily imbalanced dataset for a classification problem, and I am trying to plot calibration curves with the `sklearn.calibration` package. Specifically, I tried the following models:

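```python
import xgboost
from sklearn.ensemble import RandomForestClassifier
from sklearn.naive_bayes import MultinomialNB
from sklearn.svm import SVC

rft = RandomForestClassifier(n_estimators=1000)
svc = SVC(probability=True, gamma="auto")
gnb = MultinomialNB(alpha=0.5)
xgb = xgboost.XGBClassifier(n_estimators=1000, learning_rate=0.08, gamma=0, subsample=0.75, colsample_bytree=1, max_depth=7)
```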
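For context, a calibration curve of this kind can be computed roughly as follows. This is a minimal sketch rather than the exact script used here: the synthetic imbalanced data from `make_classification`, the 5% positive rate, the reduced number of trees, and the restriction to two of the four models are all assumptions made for illustration.

```python
import numpy as np
from sklearn.calibration import calibration_curve
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import MultinomialNB
from sklearn.preprocessing import MinMaxScaler

# Synthetic stand-in for the real data: roughly 5% positives (an assumption).
X, y = make_classification(
    n_samples=4000, n_features=20, weights=[0.95, 0.05], random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.5, stratify=y, random_state=0
)

# MultinomialNB requires non-negative features, so scale to [0, 1]
# using the training split and clip the test split into the same range.
scaler = MinMaxScaler().fit(X_train)
X_train = scaler.transform(X_train)
X_test = np.clip(scaler.transform(X_test), 0.0, 1.0)

models = {
    "Random Forest": RandomForestClassifier(n_estimators=100, random_state=0),
    "Naive Bayes": MultinomialNB(alpha=0.5),
}

curves = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    # Predicted probability of the positive class on held-out data.
    prob_pos = model.predict_proba(X_test)[:, 1]
    # calibration_curve returns (fraction of positives, mean predicted
    # probability) per non-empty bin.
    frac_pos, mean_pred = calibration_curve(y_test, prob_pos, n_bins=10)
    curves[name] = (mean_pred, frac_pos)
    print(name, np.round(mean_pred, 2), np.round(frac_pos, 2))
```

Plotting each pair in `curves` as mean predicted probability (x-axis) against fraction of positives (y-axis) gives the curves; a perfectly calibrated model lies on the diagonal.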

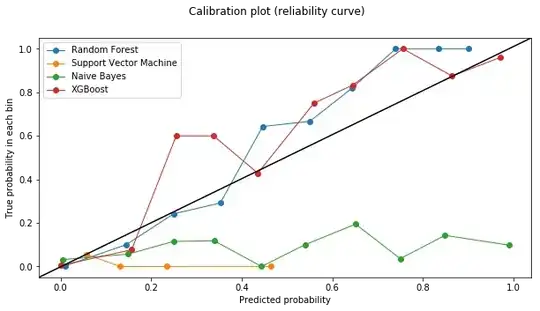

The calibration curve is the following

As you can see in the plot, Random Forest and XGBoost are close to the perfectly calibrated line, whereas Naive Bayes and SVM deviate strongly from it.

How can I explain the poor calibration of those two models?