From ISLR:

... we consider all predictors $X_1$, . . . , $X_p$, and all

possible values of the cutpoint s for each of the predictors, and then

choose the predictor and cutpoint such that the resulting tree has the

lowest RSS ...

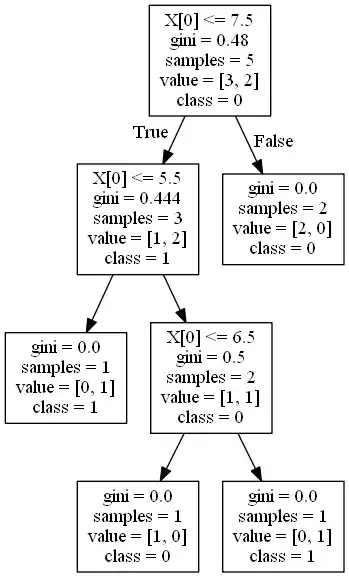

Since it's a classification problem, the best split is chosen by maximizing the Gini Gain, which is calculated by subtracting the weighted impurities of the branches from the original Gini impurity.

For c total classes with the probability of picking a datapoint with class, i is p(i), then the Gini Impurity is calculated as:

\begin{equation}

G = \sum_{i=1}^{c} [ p(i) * (1 − p(i)) ]

\end{equation}

1. Gini Impurity

Here, c = 2 , P(0) = 3/5 and P(1) = 2/5

G = [P(0) * (1 - P(0))] + [P(1) * (1 - P(1))]

G = [3/5 * (1 - 3/5)] + [2/5 * (1 - 2/5)] = 12/25

G = 0.48

2. Gini Gain

Now, let's determine the quality of each split by weighting the impurity of each branch. This value - Gini Gain is used to picking the best split in a decision tree.

In layman terms, Gini Gain = original Gini impurity - weighted Gini impurities So, higher the Gini Gain is better the split.

Split at 6.5:

Gini Impurity G_left = [1/2 * (1 - 1/2)] + [1/2 * (1 - 1/2)] = 0.50

Gini Impurity G_right = [2/3 * (1 - 2/3)] + [1/3 * (1 - 1/3)] = 0.44

Weighted Gini = (1/5 * .50) + (4/5 * 0.44) = 0.45

Gini Gain = 0.48 - 0.45 = 0.03

Split at 7.5:

Gini Impurity G_left = [2/3 * (1 - 2/3)] + [1/3 * (1 - 1/3)] = 0.444

Gini Impurity G_right = [2/2 * (1 - 2/2)] = 0

Weighted Gini = (3/5 * 0.444) + (2/5 * 0) = 0.27

Gini Gain = 0.48 - 0.27 = 0.21

Split at 8.5:

Gini Impurity G_left = [2/4 * (1 - 2/4)] + [2/4 * (1 - 2/4)] = 0.500

Gini Impurity G_right$ = [1/1 * (1 - 1/1)] = 0

Weighted Gini = (4/5 * 0.5) + (1/5 * 0) = 0.40

Gini Gain = 0.48 - 0.40 = 0.08

So, the best split will be chosen 7.5 because it has the highest Gini Gain.