I have trained an RL agent using the DQN algorithm. After 20,000 episodes my rewards have converged. Now when I test this agent, it always takes the same action, irrespective of the state. I find this very weird. Can someone help me with this? Is there any reason anyone can think of for why the agent behaves this way?

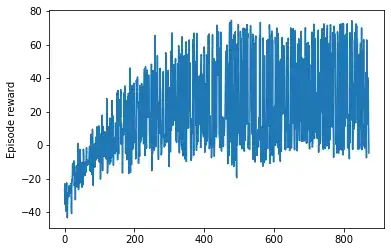

Reward plot

When I test the agent

    state = env.reset()
    print('State: ', state)
    state_encod = np.reshape(state, [1, state_size])
    q_values = model.predict(state_encod)
    action_key = np.argmax(q_values)
    print(action_key)
    print(index_to_action_mapping[action_key])
    print(q_values[0][0])
    print(q_values[0][action_key])
    q_values_plotting = []
    for i in range(0, action_size):
        q_values_plotting.append(q_values[0][i])
    plt.plot(np.arange(0, action_size), q_values_plotting)

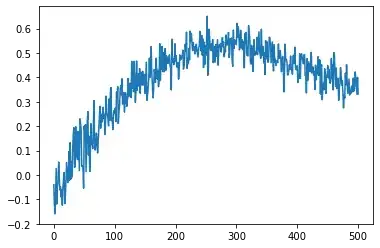

Every time it gives the same q_values plot, even though the initial state is different every time. The Q-value plot is shown above.
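To put a number on "the same q_values every time", a check along the following lines could help. This is only a minimal sketch: `summarize_q_values`, `collapsed_predict`, and `state_dependent_predict` are hypothetical names introduced for illustration, and the two predict functions are stand-ins for `model.predict` so that the snippet runs on its own.

```python
import numpy as np

def summarize_q_values(predict_fn, states):
    """Report how much the predicted Q-values change across a batch of states."""
    states = np.asarray(states, dtype=np.float32)
    q = np.asarray(predict_fn(states))        # expected shape: (n_states, n_actions)
    greedy = np.argmax(q, axis=1)             # greedy action for each state
    return {
        "distinct_greedy_actions": int(np.unique(greedy).size),
        "max_std_across_states": float(q.std(axis=0).max()),
    }

# Hypothetical stand-ins for model.predict (assumptions, not the real network).
def collapsed_predict(states):
    # Ignores its input: every state gets identical Q-values.
    return np.tile(np.array([0.1, 0.9, 0.3]), (len(states), 1))

def state_dependent_predict(states):
    # Q-values are a fixed linear function of the state.
    weights = np.array([[1.0, -1.0, 0.0], [0.0, 1.0, -1.0]])
    return states @ weights

rng = np.random.default_rng(0)
sample_states = rng.normal(size=(100, 2))

print(summarize_q_values(collapsed_predict, sample_states))
print(summarize_q_values(state_dependent_predict, sample_states))
```

With the real agent, `predict_fn` would be `model.predict` and `states` would be a stack of states collected from repeated `env.reset()` calls, reshaped to `(n, state_size)`. A spread near zero across states would suggest the network output barely depends on its input at all, rather than one action simply being best everywhere.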

Testing code:

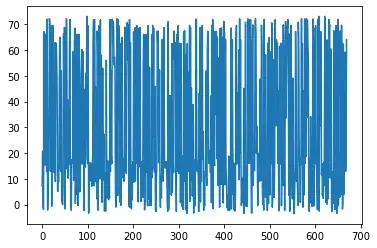

    test_rewards = []
    for episode in range(1000):
        terminal_state = False
        state = env.reset()
        episode_reward = 0
        while terminal_state == False:
            print('State: ', state)
            state_encod = np.reshape(state, [1, state_size])
            q_values = model.predict(state_encod)
            action_key = np.argmax(q_values)
            action = index_to_action_mapping[action_key]
            print('Action: ', action)
            next_state, reward, terminal_state = env.step(state, action)
            print('Next_state: ', next_state)
            print('Reward: ', reward)
            print('Terminal_state: ', terminal_state, '\n')
            print('----------------------------')
            episode_reward += reward
            state = deepcopy(next_state)
        print('Episode Reward' + str(episode_reward))
        test_rewards.append(episode_reward)
    plt.plot(test_rewards)

Thanks.