I am studying the policy gradient through the website: https://towardsdatascience.com/understanding-actor-critic-methods-931b97b6df3f

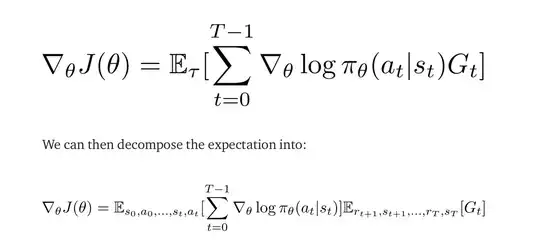

Couldn't figure out how the first equation becomes the second equation?

In the second equation, why the first Expectation value have only s_0, a_0, s_1, a_1 ... s_t, a_t but no reward involved? Also, the second Expectation Value has only r_t+1, s_t+1, ... r_T, s_T, but no action involved? Could anyone please share the thoughts/intuition behind this? Thank you!