I'm using pre-trained DistilBERT model from Huggingface with custom classification head, which is almost the same as in the reference implementation:

class PretrainedTransformer(nn.Module):

def __init__(

self, target_classes):

super().__init__()

base_model_output_shape=768

self.base_model = DistilBertModel.from_pretrained("distilbert-base-uncased")

self.classifier = nn.Sequential(

nn.Linear(base_model_output_shape, out_features=base_model_output_shape),

nn.ReLU(),

nn.Dropout(0.2),

nn.Linear(base_model_output_shape, out_features=target_classes),

)

for layer in self.classifier:

if isinstance(layer, nn.Linear):

layer.weight.data.normal_(mean=0.0, std=0.02)

if layer.bias is not None:

layer.bias.data.zero_()

def forward(self, input_, y=None):

X, length, attention_mask = input_

base_output = self.base_model(X, attention_mask=attention_mask)[0]

base_model_last_layer = base_output[:, 0]

cls = self.classifier(base_model_last_layer)

return cls

During training, I use linear LR warmup schedule with max LR=5-e5 and cross entropy loss.

In general, the model is able to learn on my dataset and reach high precision/recall metrics.

My question is:

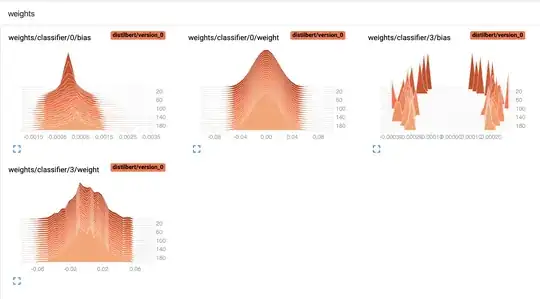

Should weights distributions and biases in classification layers change more during training? It seems like the weights almost do not change at all, even when I do not initialize them as in the code (to mean=0.0 and std=0.02). Is this an indication that something is wrong with my model or it's just because the layers I've added are redundant and model does not learn nothing new?