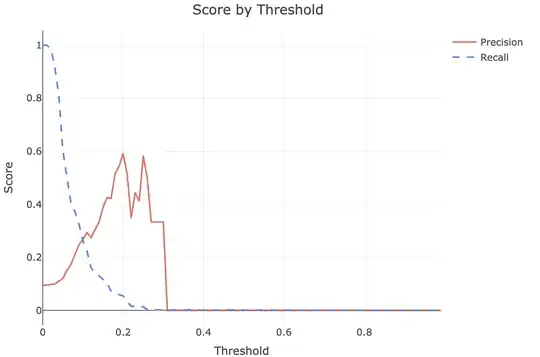

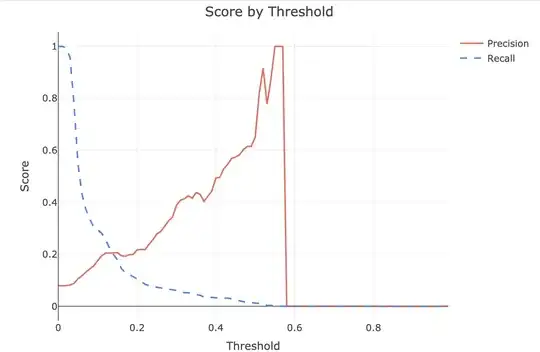

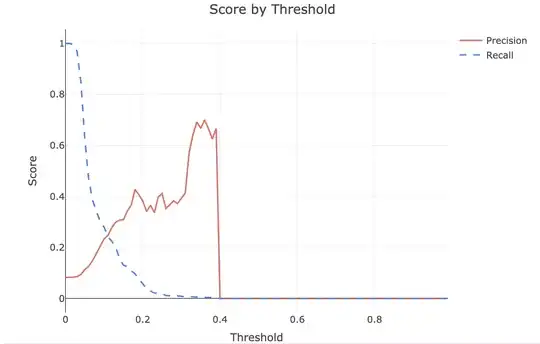

My problem is the following: I have a binary logistic regression model trained on a very imbalanced dataset, and it outputs the predicted probability (as a percentage) of the positive class. As the images show, when the decision threshold is increased, there is a point beyond which the model stops predicting the positive class at all. I am researching calibration techniques to make it work better, but I thought I might get some direction here.
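For reference, here is a minimal sketch of the kind of threshold sweep described above. It assumes scikit-learn and uses a synthetic dataset with about 2% positives generated by `make_classification`; the data, threshold grid, and variable names are illustrative assumptions, not my actual setup. It counts how many test rows are labelled positive at each threshold.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Synthetic, heavily imbalanced data (about 2% positives).
# This is an assumption standing in for the real dataset.
X, y = make_classification(
    n_samples=20000, n_features=10, weights=[0.98, 0.02], random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, stratify=y, random_state=0
)

model = LogisticRegression(max_iter=1000).fit(X_train, y_train)
proba = model.predict_proba(X_test)[:, 1]  # P(y = 1) for each test row

# Count how many rows are labelled positive at each threshold.
thresholds = np.round(np.arange(0.05, 1.0, 0.05), 2)
counts = {float(t): int((proba >= t).sum()) for t in thresholds}

for t, n_pos in counts.items():
    print(f"threshold={t:.2f}  predicted positives={n_pos}")

# Any threshold above the largest predicted probability yields zero positives.
print(f"largest predicted probability: {proba.max():.3f}")
```

In this sketch the count of predicted positives can only decrease as the threshold rises, and it hits zero once the threshold exceeds the largest predicted probability, which is one mechanical reason a model "stops predicting".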

I've tried assigning class weights, but it didn't seem to help much.
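A minimal sketch of what class weighting typically looks like in scikit-learn, again on assumed synthetic data. `class_weight="balanced"` is a real `LogisticRegression` option; the comparison below is illustrative. One possible reason weighting seems not to help is that it often mostly shifts the predicted probabilities upward, while the ranking of rows (measured by ROC AUC) may change little.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split

# Assumed synthetic stand-in for the real imbalanced dataset (~2% positives).
X, y = make_classification(
    n_samples=20000, n_features=10, weights=[0.98, 0.02], random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, stratify=y, random_state=0
)

plain = LogisticRegression(max_iter=1000).fit(X_train, y_train)
# "balanced" weights each class by n_samples / (n_classes * class_count),
# so the rare class counts far more in the loss.
weighted = LogisticRegression(max_iter=1000, class_weight="balanced").fit(
    X_train, y_train
)

p_plain = plain.predict_proba(X_test)[:, 1]
p_weighted = weighted.predict_proba(X_test)[:, 1]

print(f"observed positive rate:          {y_test.mean():.3f}")
print(f"mean predicted proba (plain):    {p_plain.mean():.3f}")
print(f"mean predicted proba (weighted): {p_weighted.mean():.3f}")
# ROC AUC depends only on the ranking, so it shows whether weighting
# improved separation or just shifted the scores.
print(f"ROC AUC (plain):    {roc_auc_score(y_test, p_plain):.3f}")
print(f"ROC AUC (weighted): {roc_auc_score(y_test, p_weighted):.3f}")
```

If the two AUCs are close, weighting has mostly moved the scores rather than separated the classes better, and the weighted probabilities are no longer estimates of the true positive rate.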

Is it a probability calibration problem?
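One way to check whether calibration is the issue is a reliability curve plus a Brier score, before and after recalibration. The sketch below assumes scikit-learn and the same synthetic data as a stand-in; `CalibratedClassifierCV`, `calibration_curve`, and `brier_score_loss` are real scikit-learn functions, while the choice of isotonic regression, 5 folds, and quantile bins is an assumption.

```python
import numpy as np
from sklearn.calibration import CalibratedClassifierCV, calibration_curve
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import brier_score_loss
from sklearn.model_selection import train_test_split

# Assumed synthetic stand-in for the real imbalanced dataset (~2% positives).
X, y = make_classification(
    n_samples=20000, n_features=10, weights=[0.98, 0.02], random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, stratify=y, random_state=0
)

# Class-weighted model: its probabilities are inflated for the rare class.
weighted = LogisticRegression(max_iter=1000, class_weight="balanced").fit(
    X_train, y_train
)
p_weighted = weighted.predict_proba(X_test)[:, 1]

# Recalibrate with isotonic regression fitted on internal CV folds.
calibrated = CalibratedClassifierCV(
    LogisticRegression(max_iter=1000, class_weight="balanced"),
    method="isotonic",
    cv=5,
).fit(X_train, y_train)
p_calibrated = calibrated.predict_proba(X_test)[:, 1]

# Reliability curve: observed positive fraction vs. mean predicted probability.
frac_pos, mean_pred = calibration_curve(
    y_test, p_calibrated, n_bins=10, strategy="quantile"
)
for fp, mp in zip(frac_pos, mean_pred):
    print(f"mean predicted={mp:.3f}  observed={fp:.3f}")

brier_weighted = brier_score_loss(y_test, p_weighted)
brier_calibrated = brier_score_loss(y_test, p_calibrated)
print(f"Brier score (weighted):   {brier_weighted:.4f}")
print(f"Brier score (calibrated): {brier_calibrated:.4f}")
```

Calibration is a monotone mapping, so it leaves the ordering of the scores essentially unchanged; it makes the probabilities more honest, but on its own it may mostly relabel where the useful thresholds sit rather than remove the point where positive predictions run out.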

The three graphs above are shown in no particular order.

Thanks in advance.