I am training a multi-task model using VGG16.

Dataset:

The dataset contains 11K images and is imbalanced. There are two tasks:

1) PFR classification: 10 classes

0 --- 5776

10-12 --- 1066

6-9 --- 1027

4-5 --- 680

1-3 --- 518

30-40 --- 471

20-25 --- 430

13-15 --- 304

60+ --- 267

41-56 --- 160

2) FT classification: 5 classes

1 --- 4979

2 --- 2322

3 --- 1932

4 --- 1051

5 --- 415
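
To put a number on the imbalance, here is a minimal sketch that turns the counts above into per-class weights using the common "balanced" heuristic `n_samples / (n_classes * count)`. The function name `balanced_class_weights` and the dictionaries are illustrative only and are not part of my training code; whether such weights actually help here is an open question.

```python
# Class counts copied from the two lists above.
pfr_counts = {"0": 5776, "10-12": 1066, "6-9": 1027, "4-5": 680, "1-3": 518,
              "30-40": 471, "20-25": 430, "13-15": 304, "60+": 267, "41-56": 160}
ft_counts = {"1": 4979, "2": 2322, "3": 1932, "4": 1051, "5": 415}


def balanced_class_weights(counts):
    """Hypothetical helper: weight = n_samples / (n_classes * class_count)."""
    total = sum(counts.values())
    n_classes = len(counts)
    return {label: total / (n_classes * n) for label, n in counts.items()}


pfr_weights = balanced_class_weights(pfr_counts)
ft_weights = balanced_class_weights(ft_counts)

# Print classes from most to least frequent with their weights.
for name, counts, weights in (("PFR", pfr_counts, pfr_weights),
                              ("FT", ft_counts, ft_weights)):
    for label in sorted(counts, key=counts.get, reverse=True):
        print(f"{name} {label:>6}: count={counts[label]:5d} weight={weights[label]:.3f}")
```

With this heuristic the majority classes get a weight below 1 and the rare classes a weight above 1, so every class contributes roughly equally to a weighted loss.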

Initially, I trained a separate CNN model for each classification task. Without loss_weights, the individual models reached 86% accuracy on the PFR task and 92% accuracy on the FT task.

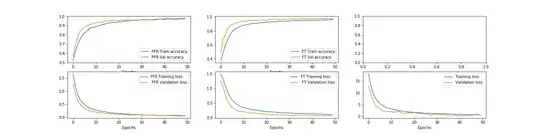

Then I tried multi-task learning, which produced the following results:

The validation accuracy is higher than the training accuracy throughout all epochs, and the validation loss is lower than the training loss.

At test time, the per-class precision and recall range from 0.10 to 0.80.
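
For clarity on what these per-class numbers mean, here is a small NumPy sketch of how precision and recall can be derived from a confusion matrix. The helper `per_class_precision_recall` and the toy labels are made up for illustration and are not my actual test data.

```python
import numpy as np


def per_class_precision_recall(y_true, y_pred, n_classes):
    """Hypothetical helper: per-class precision and recall from integer labels."""
    # Confusion matrix: rows are true classes, columns are predicted classes.
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    np.add.at(cm, (y_true, y_pred), 1)

    tp = np.diag(cm).astype(float)
    predicted = cm.sum(axis=0)  # how often each class was predicted
    actual = cm.sum(axis=1)     # how often each class really occurs

    # Classes that are never predicted (or never occur) get 0 instead of NaN.
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, actual, out=np.zeros_like(tp), where=actual > 0)
    return precision, recall


# Toy, imbalanced example: class 0 dominates and class 2 is never predicted.
y_true = np.array([0, 0, 0, 0, 1, 1, 2])
y_pred = np.array([0, 0, 0, 1, 1, 0, 0])
precision, recall = per_class_precision_recall(y_true, y_pred, n_classes=3)
print("precision:", precision)
print("recall:   ", recall)
```

In this toy case the overall accuracy is 4/7, yet the rare class has zero precision and recall, which is why I am looking at per-class metrics rather than accuracy alone.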

Is the validation accuracy higher because the model has dropout layers?
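
To make the dropout question concrete: dropout randomly zeroes activations only in training mode and is a no-op at inference, so training metrics are computed on a handicapped network while validation metrics are not. The NumPy sketch below mimics that behaviour with "inverted" dropout. The `dropout` function here is a stand-in written for illustration, not the Keras implementation, and it does not by itself prove that dropout explains my curves.

```python
import numpy as np


def dropout(x, rate, training, rng):
    """Illustrative inverted dropout: active only when training=True."""
    if not training:
        return x  # inference: activations pass through unchanged
    keep = rng.random(x.shape) >= rate
    # Scale the surviving units so the expected activation stays the same.
    return x * keep / (1.0 - rate)


rng = np.random.default_rng(0)
activations = np.ones((4, 1024))  # stand-in for the 1024-unit shared layer output

train_out = dropout(activations, rate=0.5, training=True, rng=rng)
eval_out = dropout(activations, rate=0.5, training=False, rng=rng)

print("fraction zeroed in training mode:", float(np.mean(train_out == 0)))
print("fraction zeroed in inference mode:", float(np.mean(eval_out == 0)))
```

With a rate of 0.5, about half of the shared features are missing on every training step, while all of them are available during validation.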

The model code is:

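    # Imports assume tensorflow.keras; adjust if using standalone Keras.
    from tensorflow.keras.applications import VGG16
    from tensorflow.keras.layers import Input, Flatten, Dense, Dropout
    from tensorflow.keras.models import Model

    # VGG16 convolutional base pretrained on ImageNet (img_size is defined elsewhere).
    baseModel = VGG16(weights="imagenet", include_top=False,
                      input_tensor=Input(shape=(img_size, img_size, 3)))

    # Shared trunk: flatten, dense layer (no activation given, so it is linear), dropout.
    flatLayer = baseModel.output
    sharedLayer = Flatten(name="flatten")(flatLayer)
    sharedLayer = Dense(1024, name="Shared")(sharedLayer)
    sharedLayer = Dropout(0.5)(sharedLayer)

    # Task 1 head: PFR classification, 10 classes.
    task1 = Dense(512, activation="relu")(sharedLayer)
    task1 = Dense(10, activation="softmax", name="PFR")(task1)

    # Task 2 head: FT classification, 5 classes.
    task2 = Dense(512, activation="relu")(sharedLayer)
    task2 = Dense(5, activation="softmax", name="FT")(task2)

    model3 = Model(inputs=baseModel.input, outputs=[task1, task2])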
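
For reference, with two output heads Keras minimizes a weighted sum of the per-output losses, where the weights come from `loss_weights`. The NumPy sketch below shows that arithmetic on a toy batch. It assumes categorical cross-entropy for both heads, and the weight values 1.0 and 0.5 are arbitrary examples, not the settings I used.

```python
import numpy as np


def categorical_crossentropy(y_true_onehot, y_prob, eps=1e-7):
    """Mean categorical cross-entropy over a batch of one-hot targets."""
    y_prob = np.clip(y_prob, eps, 1.0 - eps)
    return float(np.mean(-np.sum(y_true_onehot * np.log(y_prob), axis=1)))


def multitask_loss(pfr_true, pfr_prob, ft_true, ft_prob, loss_weights):
    """Weighted sum of the two task losses (weights keyed by output name)."""
    pfr_loss = categorical_crossentropy(pfr_true, pfr_prob)
    ft_loss = categorical_crossentropy(ft_true, ft_prob)
    total = loss_weights["PFR"] * pfr_loss + loss_weights["FT"] * ft_loss
    return total, pfr_loss, ft_loss


# Toy batch of 2 samples with uniform predictions from both heads.
pfr_true = np.eye(10)[[0, 3]]     # one-hot PFR labels (10 classes)
pfr_prob = np.full((2, 10), 0.1)  # uniform softmax output
ft_true = np.eye(5)[[1, 4]]       # one-hot FT labels (5 classes)
ft_prob = np.full((2, 5), 0.2)

total, pfr_loss, ft_loss = multitask_loss(
    pfr_true, pfr_prob, ft_true, ft_prob,
    loss_weights={"PFR": 1.0, "FT": 0.5},  # arbitrary example weights
)
print(f"PFR loss={pfr_loss:.4f}  FT loss={ft_loss:.4f}  total={total:.4f}")
```

Because the 10-class head starts from a larger loss (ln 10 versus ln 5 for uniform predictions), the unweighted sum leans toward the PFR task, which is one reason to experiment with `loss_weights`.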