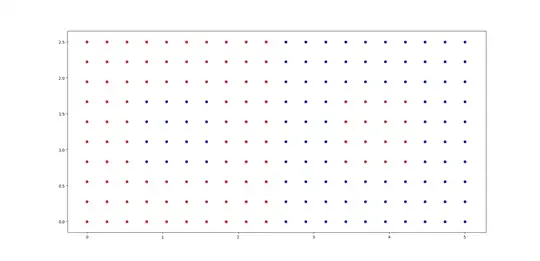

I was exploring the AdaBoost classifier in sklearn. This is the plot of the dataset. (X,Y are the predictor columns and the color is the label)

As you can see there are exactly 16 points in either side that can be easily miss-classified. TO check how the performance increases with increasing n_estimators I used this code

for i in range(1,21):

clf = AdaBoostClassifier(DecisionTreeClassifier(max_depth=2),

n_estimators=i,algorithm='SAMME')

clf.fit(X_df,y)

y_pred = clf.predict(X_df)

from sklearn.metrics import confusion_matrix as CM

#CM(y_pred,y_pred1)

#CM(y,y_pred1)

print(i,CM(y,y_pred))

Upto n_estimators = 13 all the 32 points were miss classified. The confusion matrix is

[[84 16]

[16 84]]

( with the exception of n_estimators=8. Here all red points were properly classified)

[[100 0]

[16 84]]

From 13 onwards it began flipping in a weird way. The confusion matrices are given in order.

[[84 16] | [[ 84 16] | [[84 16] | [[ 84 16] | [[ 84 16] | [[100 0] | [[100 0] | [[84 16]

[16 84]] | [ 0 100]] | [16 84]] | [ 0 100]] | [ 0 100]] | [ 16 84]] | [ 0 100]] | [16 84]]

Where apparently n_estimators=19 is giving better performance than n_estimators = 20.

Can someone please explain what is happening and what is causing this behavior?