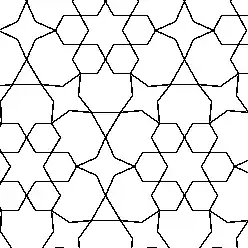

My goal is to create simple geometric line drawings in pure black and white. I do not need gray tones. Something like this (example of training image):

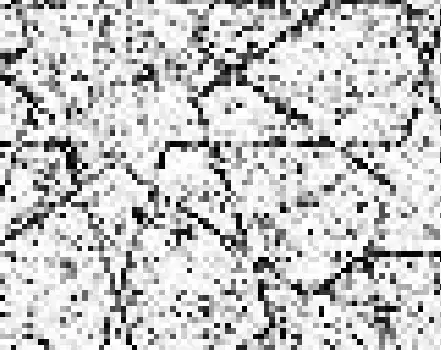

However, the GAN I am using (described below) produces gray-tone images. For example, here is some detail from a generated image.

I used this PyTorch-based Vanilla GAN as the base for what I am trying to do. I suspect my GAN is doing far too much work calculating all those floats. I'm pretty sure the data is normalized to values between -1 and 1 inside the network? I have read that it is a bad idea to try using 0 and 1 because of problems with the tanh activation layer. So, any other ideas? Here is the code for my discriminator and generator.

    import torch.nn as nn

    image_size = 248  # images are 248x248 pixels
    batch_size = 10
    n_noise = 100     # size of the noise vector

    class Discriminator(nn.Module):
        """
        Simple Discriminator w/ MLP
        """
        def __init__(self, input_size=image_size ** 2, num_classes=1):
            super(Discriminator, self).__init__()
            self.layer = nn.Sequential(
                nn.Linear(input_size, 512),
                nn.LeakyReLU(0.2),
                nn.Linear(512, 256),
                nn.LeakyReLU(0.2),
                nn.Linear(256, num_classes),
                nn.Sigmoid(),
            )

        def forward(self, x):
            # Flatten each image to a vector before the linear layers
            y_ = x.view(x.size(0), -1)
            y_ = self.layer(y_)
            return y_

Generator:

    class Generator(nn.Module):
        """
        Simple Generator w/ MLP
        """
        # The input is the noise vector, so its size is n_noise, not batch_size
        def __init__(self, input_size=n_noise, num_classes=image_size ** 2):
            super(Generator, self).__init__()
            self.layer = nn.Sequential(
                nn.Linear(input_size, 128),
                nn.LeakyReLU(0.2),
                nn.Linear(128, 256),
                nn.BatchNorm1d(256),
                nn.LeakyReLU(0.2),
                nn.Linear(256, 512),
                nn.BatchNorm1d(512),
                nn.LeakyReLU(0.2),
                nn.Linear(512, 1024),
                nn.BatchNorm1d(1024),
                nn.LeakyReLU(0.2),
                nn.Linear(1024, num_classes),
                nn.Tanh()  # outputs lie in [-1, 1]
            )

        def forward(self, x):
            y_ = self.layer(x)
            # Reshape the flat output to (batch, channels, height, width)
            y_ = y_.view(x.size(0), 1, image_size, image_size)
            return y_
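For reference, the -1 to 1 range mentioned above is the range of the final tanh layer, and every value strictly between the two ends becomes a gray tone once it is mapped back to a pixel. The sketch below uses plain Python to show that mapping. The helper names `to_model_range`, `to_pixel`, and `binarize` are hypothetical and not part of the original GAN code, and the threshold of 0 is an assumption.

```python
def to_model_range(pixel):
    """Map an 8-bit pixel value (0..255) to the [-1, 1] range of tanh."""
    return pixel / 127.5 - 1.0

def to_pixel(value):
    """Map a generator output in [-1, 1] back to an 8-bit pixel value."""
    return int(round((value + 1.0) * 127.5))

def binarize(value, threshold=0.0):
    """Hypothetical post-processing: snap an output to black (0) or white (255)."""
    return 255 if value > threshold else 0

# Assumed sample outputs from a tanh layer
samples = [-1.0, -0.4, 0.3, 1.0]
print([to_pixel(v) for v in samples])   # intermediate values become grays
print([binarize(v) for v in samples])   # only 0 or 255 remain
```

Thresholding like this only changes the saved image and leaves training untouched, so it is a display-time option rather than a fix inside the network.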

What I have so far consumes pretty much all of my available memory, so simplifying it and/or speeding it up would both be a plus. My input images are 248px by 248px; if I go any smaller than that, they are no longer useful. That is quite a bit larger than the MNIST digits (28x28) the original GAN was built for. I am also quite new to all of this, so any other suggestions are appreciated.
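To put the memory use in perspective, here is a rough parameter count for the two networks shown above. It is a back-of-the-envelope sketch in plain Python: it assumes the generator input has `n_noise = 100` entries and counts only learnable weights stored as 32-bit floats, ignoring activations, gradients, and optimizer state. The helpers `linear_params` and `mlp_params` are hypothetical names used only for this illustration.

```python
image_size = 248
n_noise = 100  # assumed size of the generator's noise input

def linear_params(n_in, n_out):
    """Weights plus biases of one nn.Linear(n_in, n_out) layer."""
    return n_in * n_out + n_out

def mlp_params(sizes):
    """Total Linear parameters for a chain of consecutive layer widths."""
    return sum(linear_params(a, b) for a, b in zip(sizes, sizes[1:]))

pixels = image_size ** 2  # 248 * 248 values per image

disc_total = mlp_params([pixels, 512, 256, 1])
gen_linear = mlp_params([n_noise, 128, 256, 512, 1024, pixels])
gen_batchnorm = 2 * (256 + 512 + 1024)  # BatchNorm1d: one scale and one shift per feature
gen_total = gen_linear + gen_batchnorm

total = disc_total + gen_total
print(f"discriminator parameters: {disc_total:,}")
print(f"generator parameters:     {gen_total:,}")
print(f"float32 weights alone:    {4 * total / 2**20:.0f} MiB")
```

Almost all of the parameters sit in the two layers that touch the image directly, `nn.Linear(input_size, 512)` in the discriminator and `nn.Linear(1024, num_classes)` in the generator, because both scale with the 248 x 248 pixel count. Gradients and optimizer state typically add further copies of these weights during training.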

EDIT: What I have tried so far. I tried making the final output of the Generator B&W by making the output binary (-1 or 1) using this class:

    class Binary(nn.Module):
        def __init__(self):
            super(Binary, self).__init__()

        def forward(self, x):
            x2 = x.clone()
            x2 = x2.sign()
            x2[x2==0] = -1.
            x = x2
            return x

And then I replaced nn.Tanh() with Binary(). It did generate black and white images, but no matter how many epochs I trained for, the output still looked random. Using grayscale and nn.Tanh(), I do at least see good results.
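One likely explanation for the random-looking output, offered as an assumption rather than a verified diagnosis of this training run, is that a sign-based layer is flat everywhere except at zero, so no useful gradient flows back through it to the generator. The sketch below illustrates this in plain Python with finite differences. `sign_binary` mirrors the scalar behavior of `Binary.forward`, while `numerical_grad` and the sample points are hypothetical helpers for this illustration.

```python
import math

def sign_binary(x):
    """Scalar version of Binary.forward: -1 for x <= 0, +1 otherwise."""
    return 1.0 if x > 0 else -1.0

def numerical_grad(f, x, eps=1e-4):
    """Central finite-difference estimate of df/dx at x."""
    return (f(x + eps) - f(x - eps)) / (2 * eps)

# Sample pre-activation values away from zero
points = [-2.0, -0.5, 0.5, 2.0]

sign_grads = [numerical_grad(sign_binary, x) for x in points]
tanh_grads = [numerical_grad(math.tanh, x) for x in points]

print("sign slopes:", sign_grads)  # flat: nothing to learn from
print("tanh slopes:", tanh_grads)  # positive: gradient can flow
```

With a flat output layer the discriminator's feedback cannot tell the generator which way to adjust its weights, which would be consistent with the Binary() results staying random while the nn.Tanh() version improves.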