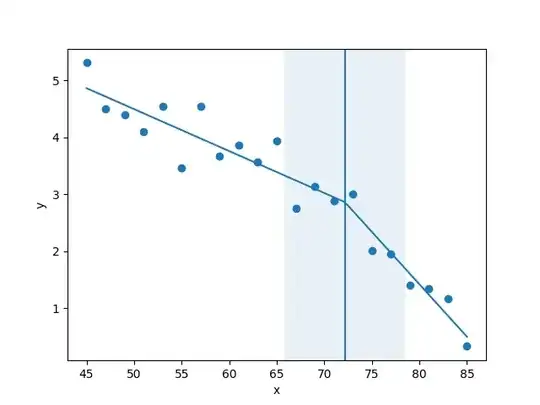

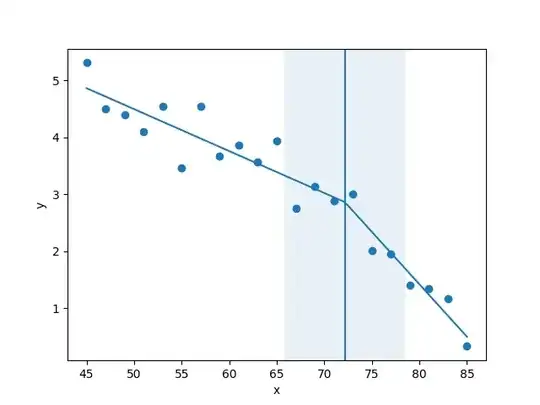

To understand the intuition behind Adagrad, let's have a look at the graphs below, representing the evolution of the loss function when model's weights are updated according to different values of the learning rate in a 1-D search space:

Graph 1 (left - Too Fast!): when updating the weights of the model, we can see that it does not converge to the global minimum. It is due to the fact that the learning rate is too high and it keeps bouncing at the bottom.

Graph 2 (center - Too Slow!): in this case, the weights' update takes place very slowly, because the learning rate is too small, thus it takes too long to converge (or even never) to the global minimum.

Graph 3 (right - Spot On!): in this case, the learning rate is adapted depending on the value of the gradient, i.e. the higher the gradient, the lower the learning rate, or, the lower the gradient, the higher the learning rate. This makes it possible to increase the chances of convergence without having the issues commented before.

Adagrad is one of several adaptive gradient descent algorithms like ADAM, ADADELTA, etc. You can check more info here.

NOTE: the pictures above have been taken from here.