Unless there's a mistake in your code, or the coefficient on $X_1$ is not significant, I'd be inclined to trust the model output.

It's not unusual for data to behave this way. Just because $X_1$ and $Y$ are inversely related with respect to the marginal distribution of $(X_1, Y)$, as can be concluded from a scatterplot of the two variables, does not mean this relationship holds conditional on other variables.

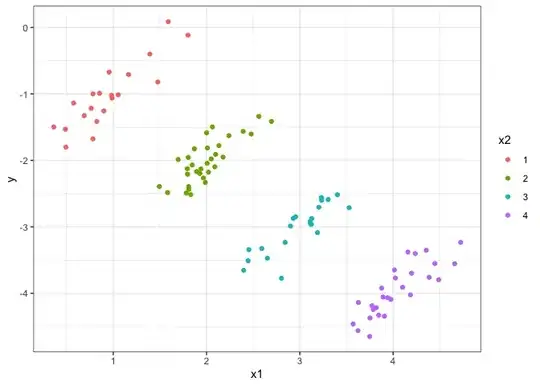

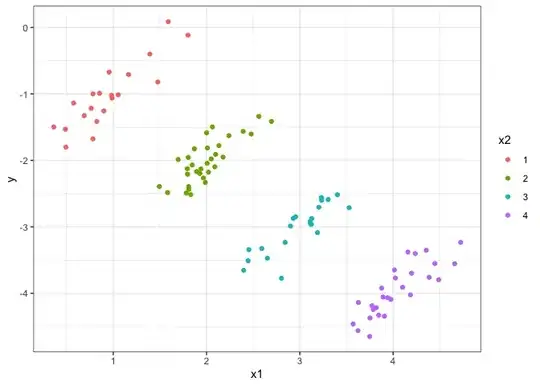

Here is an example where $(X_1, Y)$ are inversely related, but are positively related conditional on another value, $X_2$. (The example is generated using R -- you've tagged python, but this concept is language-agnostic):

library(tidyverse)

library(broom)

set.seed(1)

N <- 100

dat <- tibble(

x2 = sample(1:4, size = N, replace = TRUE),

x1 = x2 + rnorm(N) / 3,

y = x1 - 2 * x2 + rnorm(N) / 5

)

ggplot(dat, aes(x1, y)) +

geom_point(aes(colour = factor(x2))) +

theme_bw() +

scale_colour_discrete("x2")

Here are the outputs of a linear regression model. You'll notice that the coefficient on $X_1$ is negative when $X_2$ is not involved, as anticipated, but is positive when $X_2$ is involved. That's because the interpretation of a regression coefficient is the relationship given the other covariates.

lm(y ~ x1, data = dat) %>%

tidy()

#> # A tibble: 2 x 5

#> term estimate std.error statistic p.value

#> <chr> <dbl> <dbl> <dbl> <dbl>

#> 1 (Intercept) -0.492 0.154 -3.20 1.83e- 3

#> 2 x1 -0.809 0.0549 -14.7 1.33e-26

lm(y ~ x1 + x2, data = dat) %>%

tidy()

#> # A tibble: 3 x 5

#> term estimate std.error statistic p.value

#> <chr> <dbl> <dbl> <dbl> <dbl>

#> 1 (Intercept) 0.0189 0.0540 0.349 7.28e- 1

#> 2 x1 1.04 0.0681 15.3 1.42e-27

#> 3 x2 -2.05 0.0726 -28.2 1.60e-48

Created on 2020-04-27 by the reprex package (v0.3.0)

This concept extends to more than two covariates, as well as continuous covariates.