Why is the regularization parameter not applied to the intercept parameter?

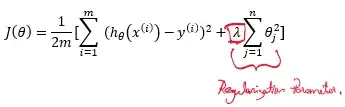

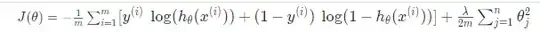

From what I have read about the cost functions for linear and logistic regression, the regularization parameter (λ) is applied to every coefficient except the intercept. For example, in the cost functions for linear and logistic regression shown above (in that order), the penalty sum starts at j = 1 rather than j = 0.
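To make the question concrete, here is a minimal sketch of the two regularized costs as I understand them. It assumes the common textbook form with an L2 penalty of λ/(2m) · Σ θ_j² for j ≥ 1, and that each row of `X` starts with a constant 1 so that `theta[0]` is the intercept. The function names and that scaling are my own assumptions and may differ from the exact formulas in the images.

```python
import math


def linear_cost(theta, X, y, lam):
    """Squared-error cost with an L2 penalty that skips theta[0] (the intercept)."""
    m = len(y)
    # Prediction error for each example; each row is assumed to begin with 1.
    residuals = [sum(t * x for t, x in zip(theta, row)) - yi
                 for row, yi in zip(X, y)]
    data_term = sum(r * r for r in residuals) / (2 * m)
    # The penalty sums over theta[1:], i.e. j starts at 1.
    penalty = lam * sum(t * t for t in theta[1:]) / (2 * m)
    return data_term + penalty


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def logistic_cost(theta, X, y, lam):
    """Cross-entropy cost with the same intercept-free L2 penalty."""
    m = len(y)
    total = 0.0
    for row, yi in zip(X, y):
        h = sigmoid(sum(t * x for t, x in zip(theta, row)))
        total += -yi * math.log(h) - (1 - yi) * math.log(1 - h)
    penalty = lam * sum(t * t for t in theta[1:]) / (2 * m)
    return total / m + penalty
```

With this form, changing λ has no effect on the cost when only `theta[0]` is non-zero, which is exactly the behavior I am asking about.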