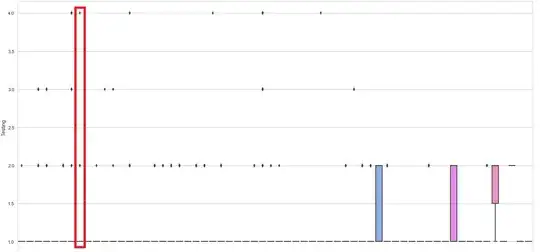

I am trying to interpret this chart.

I am not sure how to interpret it. For example, the LGBM validation-error box is wide and similar to the train box, which suggests to me that there is no overfitting problem. But when I look at other charts from the LGBM run, I can see that LGBM really is overfitted, so I don't know how to interpret this chart correctly.

This is as far as I can get with the interpretation:

LightGBM is maybe the best option because it is faster and still gives enough accuracy. Compared with the other two, bagging overfits less, because the difference between its train and validation errors is smaller.
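
To make that train-versus-validation comparison concrete, I could compute the gap per fold instead of only comparing the boxes by eye. This is only a minimal sketch: `overfit_gap` is a hypothetical helper, the model names and numbers are placeholders that do not come from my chart, and it assumes each model has one train error and one validation error per cross-validation fold.

```python
from statistics import mean, stdev


def overfit_gap(train_errors, val_errors):
    """Summarize the per-fold gap (validation error minus train error)."""
    if len(train_errors) != len(val_errors):
        raise ValueError("need one train and one validation error per fold")
    gaps = [v - t for t, v in zip(train_errors, val_errors)]
    return {
        "mean_train": mean(train_errors),
        "mean_val": mean(val_errors),
        "mean_gap": mean(gaps),
        # Spread of the gap across folds; 0.0 if there is only one fold.
        "gap_std": stdev(gaps) if len(gaps) > 1 else 0.0,
    }


# Placeholder per-fold errors, NOT taken from the chart above.
example_errors = {
    "model_a": ([0.10, 0.12, 0.11], [0.20, 0.22, 0.21]),
    "model_b": ([0.15, 0.16, 0.14], [0.17, 0.18, 0.16]),
}

for name, (train, val) in example_errors.items():
    summary = overfit_gap(train, val)
    print(
        f"{name}: train={summary['mean_train']:.3f} "
        f"val={summary['mean_val']:.3f} gap={summary['mean_gap']:.3f}"
    )
```

With numbers like these, a larger mean gap would point to more overfitting even when the two boxes have a similar width, since the width only shows how much the error varies across folds.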

Any ideas?

Thanks