I am a university student studying machine learning, and I have come across the concepts of bias and variance. In my professor's slides, the bias is defined as:

$bias = E[error_s(h)]-error_d(h)$

where $h$ is the hypothesis, $error_s(h)$ is the sample error, and $error_d(h)$ is the true error. In particular, the slides say that we have bias when the training set and the test set are not independent.

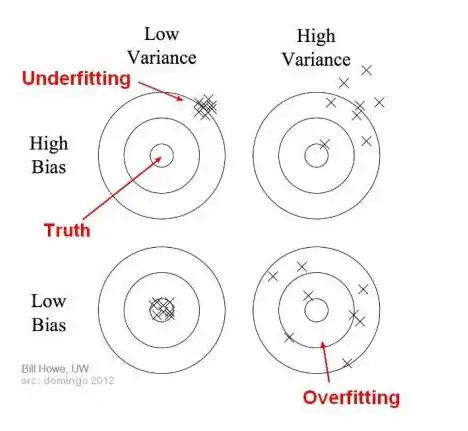

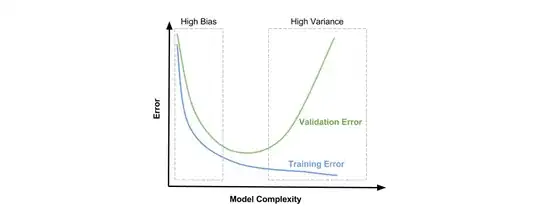

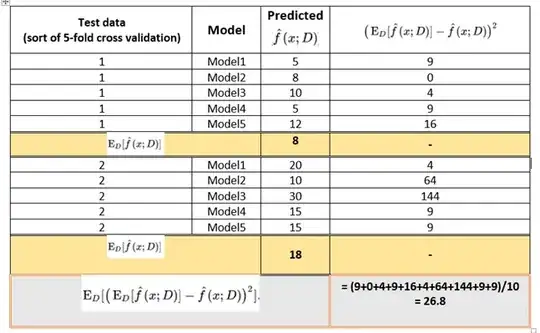

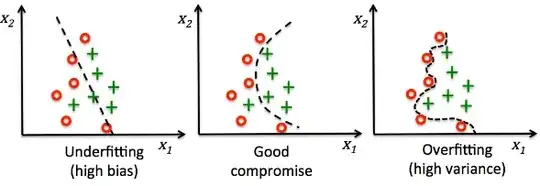
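A minimal sketch of the slide definition, under assumptions that are not from the slides: the distribution in `draw`, the 20% label noise, the 1-nearest-neighbour hypothesis, and every function name are made up for illustration. It estimates $E[error_s(h)] - error_d(h)$ twice, once with the sample error measured on the training set itself and once on an independent test set. The true error is only approximated here, using a large fresh sample.

```python
import numpy as np

rng = np.random.default_rng(0)

def draw(n):
    """Draw n labelled points from a hypothetical distribution D."""
    x = rng.uniform(0.0, 1.0, size=n)
    y = (x > 0.5).astype(int)
    flip = rng.random(n) < 0.2           # assumed 20% label noise
    return x, np.where(flip, 1 - y, y)

def predict_1nn(x_train, y_train, x_query):
    """Hypothesis h: label each query point like its nearest training point."""
    nearest = np.abs(x_query[:, None] - x_train[None, :]).argmin(axis=1)
    return y_train[nearest]

def error(y_true, y_pred):
    """Fraction of misclassified points."""
    return float(np.mean(y_true != y_pred))

def estimate_bias(trials=200, n=50, n_big=5000):
    """Average (sample error - approximate true error) over many trials."""
    gap_train, gap_test = [], []
    for _ in range(trials):
        x_tr, y_tr = draw(n)             # training sample, used to build h
        x_te, y_te = draw(n)             # independent test sample
        x_big, y_big = draw(n_big)       # large sample standing in for D
        err_true = error(y_big, predict_1nn(x_tr, y_tr, x_big))
        err_train = error(y_tr, predict_1nn(x_tr, y_tr, x_tr))
        err_test = error(y_te, predict_1nn(x_tr, y_tr, x_te))
        gap_train.append(err_train - err_true)
        gap_test.append(err_test - err_true)
    return float(np.mean(gap_train)), float(np.mean(gap_test))

bias_train, bias_test = estimate_bias()
print(f"bias when S is the training set:    {bias_train:+.3f}")
print(f"bias when S is an independent test: {bias_test:+.3f}")
```

In this setup the first number should come out clearly negative (the training-set error is optimistic), while the second should be close to zero.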

After reading this, I tried to dig a little deeper into the concept, so I searched the internet and found this video, which defines bias as the inability of a machine learning model to capture the true relationship.
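A minimal sketch of the video's notion of bias, assuming a made-up quadratic "true relationship" with small Gaussian noise (the data, the noise level, and the helper `train_mse` are illustrative assumptions, not taken from the video). A straight line cannot represent the curve, so its error stays high even on the data it was fitted to, while a quadratic model fits well.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical true relationship: y = x^2 plus small Gaussian noise
x = rng.uniform(-1.0, 1.0, size=2000)
y = x**2 + rng.normal(0.0, 0.05, size=x.size)

def train_mse(degree):
    """Fit a polynomial of the given degree and return its training MSE."""
    coeffs = np.polyfit(x, y, deg=degree)
    return float(np.mean((np.polyval(coeffs, x) - y) ** 2))

mse_line = train_mse(1)   # straight line: too rigid for a curved relationship
mse_quad = train_mse(2)   # quadratic: matches the assumed true relationship

print(f"training MSE, straight line: {mse_line:.4f}")
print(f"training MSE, quadratic:     {mse_quad:.4f}")
```

More data would not help the straight line here, because the limitation comes from the model family rather than from the sample.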

I don't understand: are the two definitions equivalent, or are these two different types of bias?

Alongside this, I am also studying the concept of variance. My professor's slides say that if I consider two different samples drawn from the same distribution, the error may vary even if the model is unbiased, whereas the video above says that variance is the difference in fits between the training set and the test set.
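A minimal sketch of the slides' notion of variance, under assumptions that are not from the slides: a made-up distribution with 20% label noise and a fixed threshold hypothesis `h` chosen without looking at any data. Because `h` never sees the samples, each sample error is an unbiased estimate of the true error (0.2 in this setup), yet the estimates still differ from one sample to the next.

```python
import numpy as np

rng = np.random.default_rng(0)
NOISE = 0.2  # assumed label-noise rate; also the true error of h below

def draw(n):
    """Draw n labelled points from a hypothetical distribution D."""
    x = rng.uniform(0.0, 1.0, size=n)
    y = (x > 0.5).astype(int)
    flip = rng.random(n) < NOISE
    return x, np.where(flip, 1 - y, y)

def h(x):
    """A fixed hypothesis, independent of every sample drawn below."""
    return (x > 0.5).astype(int)

def sample_error(n):
    """Error of h measured on one fresh sample of size n."""
    x, y = draw(n)
    return float(np.mean(h(x) != y))

n = 40
errors = np.array([sample_error(n) for _ in range(2000)])
mean_error = float(errors.mean())
std_error = float(errors.std())

print(f"two individual samples: {errors[0]:.3f} vs {errors[1]:.3f}")
print(f"mean over all samples:  {mean_error:.3f}")
print(f"spread (std):           {std_error:.3f}")
```

The mean should land near 0.2, while the spread should be roughly $\sqrt{0.2 \cdot 0.8 / 40} \approx 0.063$, which shrinks as the sample size `n` grows.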

In this case too, the definitions are different. Why?