I've been trying to follow this paper on Bayesian Risk Pruning. I'm not very familiar with this type of pruning, but I'm wondering a few things:

(1) The paper describes risk-rates to be defined per example. We have $R_k(a_i|x)=\sum\limits_{j=1,j \neq i}^{T_c} L_k(a_i|C_j)p_k(C_j|x)$. $L_k(a_i|C_j)$ is defined to be the loss of an example being predicted in class $C_j$ when the true class is $C_i$. $p_k(C_j|x)$ is the estimated probability of an example belonging to $C_j$.

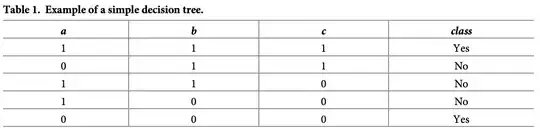

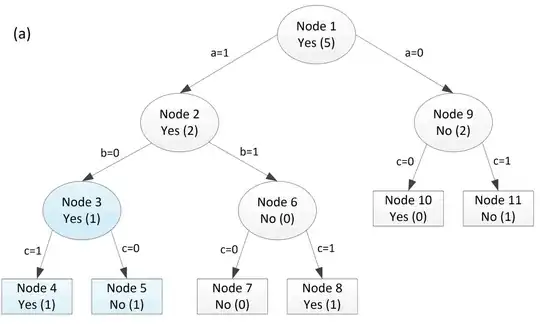

Above is the decision tree that was produced from a C4.5 algorithm. Pruning occurs from left to right, bottom-up. My main question: How are the risk-rates found in the decision tree such as for Node 3?

Above is the decision tree that was produced from a C4.5 algorithm. Pruning occurs from left to right, bottom-up. My main question: How are the risk-rates found in the decision tree such as for Node 3?

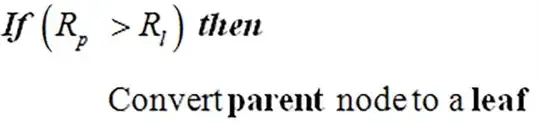

(2) There's also conflicting statements here:

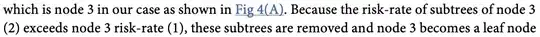

The first image states that if parent risk-rate exceeds the total risk-rate of the leaves, then the parent is pruned to a leaf. However, the second claims that pruning occurs if the leaf risk-rate exceeds the parent. To confirm, if the risk-rate of the parent is less than the risk-rate of the leaves under the subtree of the parent, then I would set the parent to be a leaf?

The first image states that if parent risk-rate exceeds the total risk-rate of the leaves, then the parent is pruned to a leaf. However, the second claims that pruning occurs if the leaf risk-rate exceeds the parent. To confirm, if the risk-rate of the parent is less than the risk-rate of the leaves under the subtree of the parent, then I would set the parent to be a leaf?

(3) From (1), loss would be 0-1 in the binary case. What could be a reasonable loss for multi-class output?

(4) From (1), would estimated probability of $C_j$ be the proportion of $C_j$ in the partitioned output class at a node? For instance, at node 3, we're looking at output = [No].

(5) From (1), would risk-rate be over all training examples?