so I'am doing a logistic regression with statsmodels and sklearn.

My result confuses me a bit. I used a feature selection algorithm in my previous step, which tells me to only use feature1 for my regression.

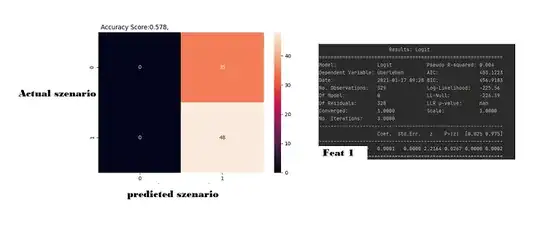

The results are the following:

So the model predicts everything with a 1 and my P-value is < 0.05 which means its a pretty good indicator to me. But the accuracy score is < 0.6 what means it doesn't say anything basically.

Can you give me a hint how to interpret this?

It's my first data science project with difficult data.

My code:

X = df_n_4["feat1"]

y = df_n_4['Survival']

# use train/test split with different random_state values

# we can change the random_state values that changes the accuracy scores

# the scores change a lot, this is why testing scores is a high-variance estimate

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=2)

print(len(y_train)," Testdaten")

# check classification scores of logistic regression

logit_model = sm.Logit(y_train, X_train).fit()

y_pred = logit_model.predict(X_test)

print('Train/Test split results:')

plt.title('Accuracy Score:{}, Variablen: feat1'.format(round((accuracy_score(y_test, y_pred.round())),3)))

cf_matrix = confusion_matrix(y_test, y_pred.round())

sns.heatmap(cf_matrix, annot=True)

plt.ylabel('Actual Szenario');

plt.xlabel('Predicted Szenario');

plt.show()

print(logit_model.summary2())