I'm using the Hugging Face `datasets` package. This is the code I have:

squad_v2 = True

model_checkpoint = "roberta-base"

batch_size = 10

from datasets import load_dataset, load_metric

datasets = load_dataset("squad_v2")

datasets

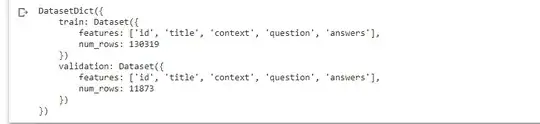

and output:

It works fine, but the dataset is very large, and I run out of GPU memory while training the model. Is there a way to use `load_dataset` to make the train and validation splits smaller, while still keeping both of them in `datasets`?
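To make the goal concrete, this is roughly what I am hoping to end up with. It is only a sketch: it assumes the split-slicing strings that `load_dataset` accepts for its `split` argument (for example `train[:10%]`), the helper names `slice_spec` and `load_small_squad` are hypothetical, and the 10% default is an arbitrary choice of mine.

```python
def slice_spec(split: str, percent: int) -> str:
    """Build a split-slicing string such as 'train[:10%]'."""
    if not 0 < percent <= 100:
        raise ValueError("percent must be between 1 and 100")
    return f"{split}[:{percent}%]"


def load_small_squad(percent: int = 10):
    """Load the first `percent` of each SQuAD v2 split into one DatasetDict.

    Hypothetical helper: it downloads the data on first call.
    """
    # Imported lazily so slice_spec stays usable without the package installed.
    from datasets import DatasetDict, load_dataset

    # Load each split separately with a slice, then regroup them so that
    # both are still reachable as datasets["train"] / datasets["validation"].
    return DatasetDict({
        name: load_dataset("squad_v2", split=slice_spec(name, percent))
        for name in ("train", "validation")
    })
```

With something like this, `datasets = load_small_squad(10)` would give an object with the same `train` and `validation` keys as before, just with fewer rows in each.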