I'm trying to implement a Naive Bayes classifier that uses either a hypercubic Parzen window or KNN to estimate the density function.

The data I'm using is Fashion MNIST. First I zero-center the vectorized data and divide it by its column-wise variance, then I feed the result to PCA and get a 9-dimensional vector for each sample.
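To make the preprocessing concrete, here is a minimal NumPy sketch of the steps described above. The function names (`fit_preprocessor`, `transform`), the `eps` guard for constant columns, and the random stand-in data are assumptions for illustration, not my actual code. It divides by the variance as described; dividing by the standard deviation is the more common convention.

```python
import numpy as np

def fit_preprocessor(X_train, n_components=9, eps=1e-8):
    """Learn centering, scaling and PCA axes from the training data only."""
    mean = X_train.mean(axis=0)
    # Column-wise variance, as described in the question. eps (an assumption)
    # avoids division by zero for constant pixels.
    scale = X_train.var(axis=0) + eps
    Z = (X_train - mean) / scale
    # PCA via SVD: rows of Vt are the principal directions, ordered by
    # decreasing singular value. Z already has zero column mean.
    _, _, Vt = np.linalg.svd(Z, full_matrices=False)
    components = Vt[:n_components]
    return mean, scale, components

def transform(X, mean, scale, components):
    """Apply the learned centering, scaling and projection."""
    return ((X - mean) / scale) @ components.T

# Stand-in data (hypothetical): 200 samples with 20 features instead of
# the 784-pixel Fashion MNIST vectors.
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(200, 20))
mean, scale, components = fit_preprocessor(X_demo, n_components=9)
X_reduced = transform(X_demo, mean, scale, components)
```

The same `mean`, `scale` and `components` would be reused for the test set, so that train and test points live in the same 9-dimensional space.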

As for the Bayes decision: for each class of the dataset, I take its samples and train a density estimator to estimate the class-conditional density. Then I multiply each density by the class prior probability, and I assign unseen data to a class by taking, for each sample, the index of the maximum of these products.
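The decision rule above can be sketched roughly as follows. This is a simplified illustration rather than my exact code: the function names, the Euclidean ball used for the KNN volume, and the toy 2-D data are all assumptions. Note that the hypercube estimate is exactly zero whenever no training sample falls inside the cube.

```python
import math
import numpy as np

def parzen_hypercube_density(x, samples, h):
    """Count samples inside a cube of side h centered at x, divided by n * h**d."""
    n, d = samples.shape
    inside = np.all(np.abs(samples - x) <= h / 2.0, axis=1)
    return inside.sum() / (n * h ** d)

def knn_density(x, samples, k):
    """k / (n * V), with V the volume of the ball reaching the k-th neighbor."""
    n, d = samples.shape
    dists = np.sqrt(((samples - x) ** 2).sum(axis=1))
    r = np.partition(dists, k - 1)[k - 1]  # distance to the k-th nearest sample
    volume = math.pi ** (d / 2) / math.gamma(d / 2 + 1) * r ** d
    return k / (n * volume)

def bayes_predict(X_test, X_train, y_train, density_fn, **kwargs):
    """Pick, per test sample, the class maximizing prior * class-conditional density."""
    classes = np.unique(y_train)
    priors = np.array([(y_train == c).mean() for c in classes])
    scores = np.empty((len(X_test), len(classes)))
    for j, c in enumerate(classes):
        class_samples = X_train[y_train == c]
        for i, x in enumerate(X_test):
            scores[i, j] = priors[j] * density_fn(x, class_samples, **kwargs)
    return classes[np.argmax(scores, axis=1)]

# Toy data (hypothetical): two well-separated 2-D Gaussian blobs.
rng = np.random.default_rng(0)
X_train = np.vstack([rng.normal(-3, 1, size=(100, 2)),
                     rng.normal(3, 1, size=(100, 2))])
y_train = np.array([0] * 100 + [1] * 100)
X_test = np.array([[-3.0, -3.0], [3.0, 3.0]])

pred_parzen = bayes_predict(X_test, X_train, y_train, parzen_hypercube_density, h=2.0)
pred_knn = bayes_predict(X_test, X_train, y_train, knn_density, k=5)
```

Both estimators share the same `bayes_predict` wrapper, so the only difference between the two models is how the class-conditional density is estimated.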

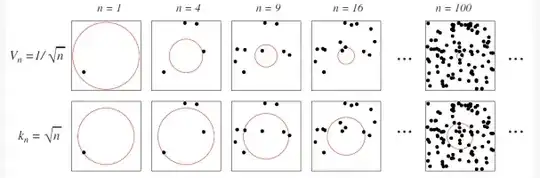

What I'm having a hard time digesting is that my Parzen-based model works way worse than my KNN-based one. I'm trying to see whether the two converge as I grow my dataset step by step, while keeping the volume of the hypercube and the K of KNN related, similar to this:

While KNN gives decent results, the Parzen results barely change as the dataset grows.

Any explanation will be highly appreciated!